How Container Filesystem Works: Building a Docker-like Container From Scratch

One of the superpowers of containers is their isolated filesystem view -

from inside a container it can look like a full Linux distro, often different from the host.

Run docker run nginx, and Nginx lands in its familiar Debian userspace no matter what Linux flavor your host runs.

But how is that illusion built?

In this post, we'll assemble a tiny but realistic, Docker-like container using only stock Linux tools: unshare, mount, and pivot_root.

No runtime magic and (almost) no cut corners.

Along the way, you'll see why the mount namespace is the bedrock of container isolation,

while other namespaces, such as PID, cgroup, UTS, and even network, play rather complementary roles.

By the end - especially if you pair this with the container networking tutorial - you'll be able to spin up fully featured, Docker-style containers using nothing but standard Linux commands. The ultimate goal of every aspiring container guru.

Prerequisites

- Some prior familiarity with Docker (or Podman, or the like) containers

- Basic Linux knowledge (shell scripting, general namespace awareness)

- Filesystem fundamentals (single directory hierarchy, mount table, bind mount, etc.)

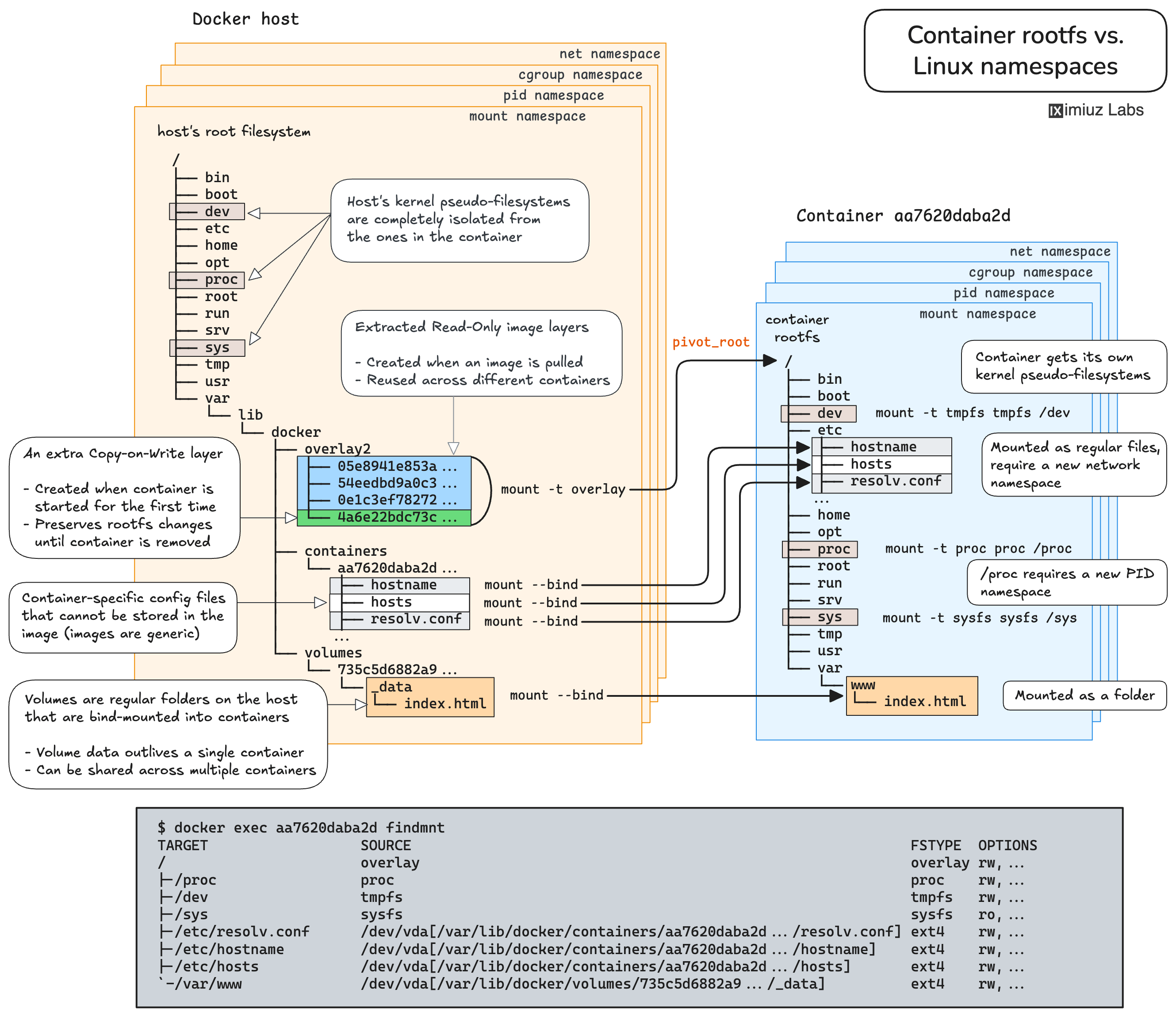

Visualizing the end result

The diagram below shows what filesystem isolation looks like when Docker creates a new container. It's all right if the drawing feels overwhelming. With the help of the hands-on exercises in this tutorial, we'll build a comprehensive mental model of how containers work, so when we revisit the diagram in the closing section, it'll look much more digestible.

What exactly does Mount Namespace isolate?

Let's do a quick experiment. In Terminal 1, start a new shell session in its own mount namespace:

sudo unshare --mount bash

Now in Terminal 2, create a file somewhere on the host's filesystem:

echo "Hello from host's mount namespace" | sudo tee /opt/marker.txt

Surprisingly or not, when you try locating this file in the newly created mount namespace using the Terminal 1 tab, it'll be there:

cat /opt/marker.txt

So what exactly did we just isolate with unshare --mount? 🤔

The answer is - a mount table. Here is how to verify it. From Terminal 1, mount something:

sudo mount --bind /tmp /mnt

💡 The above command uses a bind mount for simplicity, but a regular mount (of a block device) would do, too.

Now if you list the contents of the /mnt folder in Terminal 1,

you should see the files of the /tmp folder:

ls -l /mnt

total 12

drwx------ 3 root root 4096 Sep 11 14:16 file1

drwx------ 3 root root 4096 Sep 11 14:16 file2

...

But at the same time, the /mnt folder remained empty in the host mount namespace.

If you run the same ls command from Terminal 2,

you'll see no files:

ls -l /mnt

total 0

Finally, the filesystem "views" started diverging between namespaces. However, we could only achieve it by creating a new mount point.

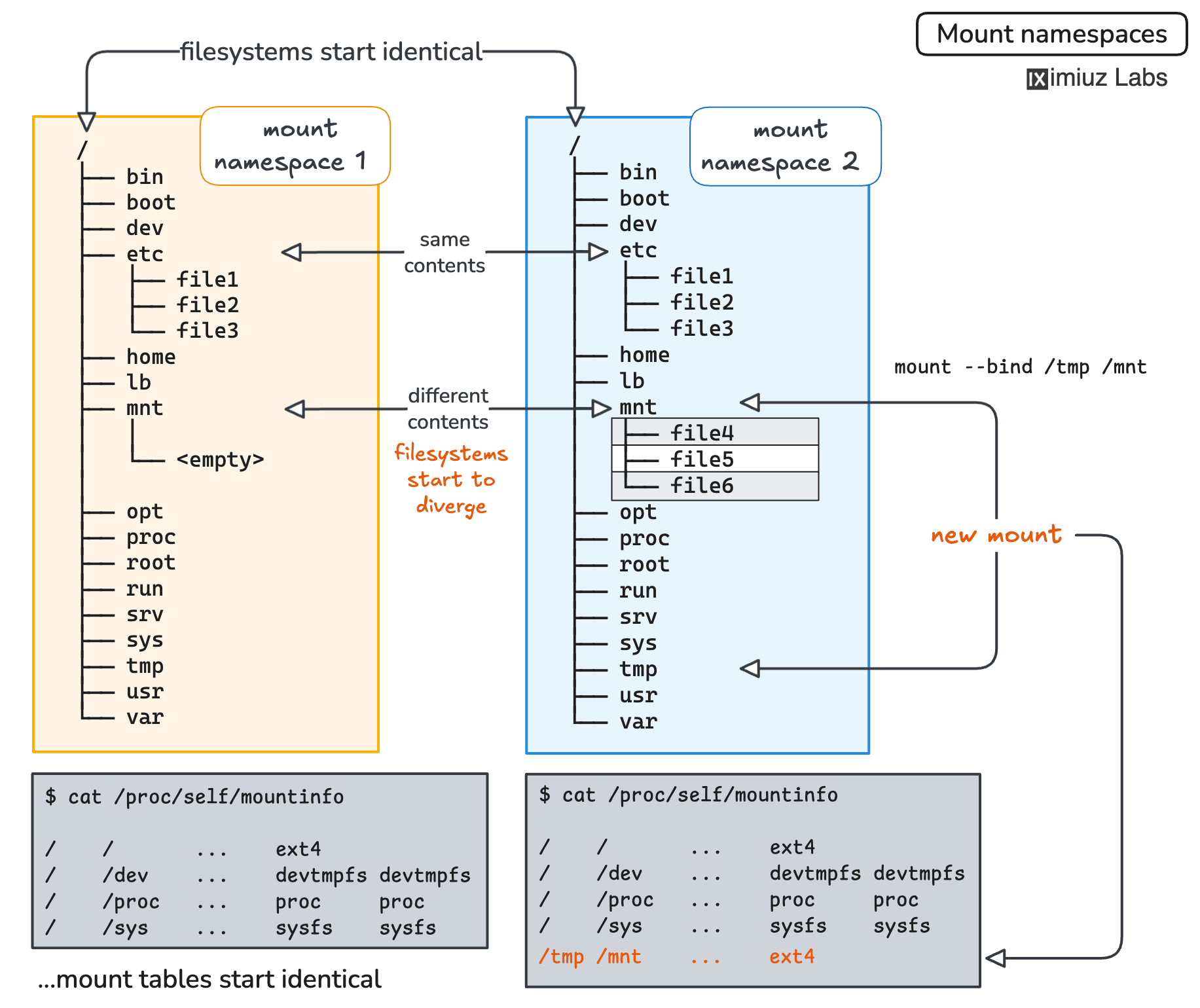

Mount namespaces, visualized

From the mount namespace man page:

Mount namespaces provide isolation of the list of mounts seen by the processes in each namespace instance. Thus, the processes in each of the mount namespace instances will see distinct single directory hierarchies.

Compare the mount tables by running findmnt from Terminal 1 and Terminal 2:

TARGET                        SOURCE     FSTYPE     OPTIONS
/                             /dev/vda   ext4       rw,...
├─/dev                        devtmpfs   devtmpfs   rw,...
│ ├─/dev/shm                  tmpfs      tmpfs      rw,...
│ ├─/dev/pts                  devpts     devpts     rw,...
│ └─/dev/mqueue               mqueue     mqueue     rw,...
├─/proc                       proc       proc       rw,...
├─/sys                        sysfs      sysfs      rw,...
│ ├─/sys/kernel/security      securityfs securityfs rw,...
│ ├─/sys/fs/cgroup            cgroup2    cgroup2    rw,...
│ ...
└─/run                        tmpfs      tmpfs      rw,...
  ├─/run/lock                 tmpfs      tmpfs      rw,...
  └─/run/user/1001            tmpfs      tmpfs      rw,...
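
The table that findmnt prints comes from /proc/self/mountinfo, which the kernel renders per mount namespace. As a rough illustration, here is a minimal Go sketch that reads the same file and prints the target, source, and filesystem type of every mount. The type and function names (mount, parseMountinfoLine) are made up for this example, and the parser assumes the field layout described in the proc man pages:

```go
package main

import (
	"bufio"
	"fmt"
	"os"
	"strings"
)

// mount holds the few mountinfo columns this sketch cares about.
type mount struct {
	Target, Source, FSType string
}

// parseMountinfoLine parses one line of /proc/<pid>/mountinfo.
// Layout: ID parentID major:minor root target options [optional...] - fstype source superopts
func parseMountinfoLine(line string) (mount, error) {
	fields := strings.Fields(line)
	sep := -1
	// Optional fields start at index 6 and end at a lone "-" separator.
	for i := 6; i < len(fields); i++ {
		if fields[i] == "-" {
			sep = i
			break
		}
	}
	if sep < 0 || sep+2 >= len(fields) {
		return mount{}, fmt.Errorf("malformed mountinfo line: %q", line)
	}
	return mount{Target: fields[4], FSType: fields[sep+1], Source: fields[sep+2]}, nil
}

func main() {
	f, err := os.Open("/proc/self/mountinfo")
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	defer f.Close()

	scanner := bufio.NewScanner(f)
	for scanner.Scan() {
		m, err := parseMountinfoLine(scanner.Text())
		if err != nil {
			fmt.Fprintln(os.Stderr, err)
			continue
		}
		fmt.Printf("%-30s %-12s %s\n", m.Target, m.Source, m.FSType)
	}
	if err := scanner.Err(); err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
}
```

Running the program from Terminal 1 and Terminal 2 should give two listings that differ only in the mounts made after the namespaces diverged.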

In hindsight, it should probably make sense - after all, we are playing with a mount namespace (and there is no such thing as filesystem namespaces, for better or worse).

💡 Interesting fact: Mount namespaces were the first namespace type added to Linux, appearing in Linux 2.4.19, ca. 2002.

💡 Pro Tip: You can quickly check the current mount namespace of a process using the following command:

readlink /proc/$PID/ns/mnt

Different inode numbers in the output will indicate different namespaces.

Try running readlink /proc/self/ns/mnt from Terminal 1 and Terminal 2.
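
If you'd rather script this check, the snippet below is a minimal Go sketch of the same idea. It reads the namespace link of a given PID (defaulting to self) and extracts the inode number, so values from two processes can be compared programmatically. The helper name parseNsLink is hypothetical, and the code assumes the usual mnt:[inode] link format:

```go
package main

import (
	"fmt"
	"os"
	"strconv"
	"strings"
)

// parseNsLink splits a namespace link target such as "mnt:[4026531840]"
// into the namespace type and its inode number.
func parseNsLink(target string) (string, uint64, error) {
	kind, rest, ok := strings.Cut(target, ":[")
	if !ok || !strings.HasSuffix(rest, "]") {
		return "", 0, fmt.Errorf("unexpected namespace link %q", target)
	}
	inode, err := strconv.ParseUint(strings.TrimSuffix(rest, "]"), 10, 64)
	if err != nil {
		return "", 0, fmt.Errorf("bad inode in %q: %w", target, err)
	}
	return kind, inode, nil
}

func main() {
	// Use the PID from the first argument, or inspect this process itself.
	pid := "self"
	if len(os.Args) > 1 {
		pid = os.Args[1]
	}

	target, err := os.Readlink("/proc/" + pid + "/ns/mnt")
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}

	kind, inode, err := parseNsLink(target)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	fmt.Printf("pid %s: %s namespace inode %d\n", pid, kind, inode)
}
```

Two processes that print the same inode number share a mount namespace. Reading the link of another user's process typically requires root.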

What the heck is Mount Propagation?

Before we jump to how exactly mount namespaces are applied by Docker, or more precisely by an OCI runtime

(e.g., runc) to create containers,

we need to learn about one more important (and related) concept - mount propagation.

⚠️ Make sure to exit the namespaced shell in Terminal 1 before proceeding with the commands in this section.

If you tried to re-do the experiment from the previous section using the unshare() system call instead of the unshare CLI command,

the results might look different.

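package main

import (
	"os"
	"os/exec"
	"runtime"
	"syscall"
)

func main() {
	// unshare() affects only the calling thread, so pin this goroutine to it.
	runtime.LockOSThread()

	// Move the current thread into a new mount namespace.
	if err := syscall.Unshare(syscall.CLONE_NEWNS); err != nil {
		panic(err)
	}

	// Start an interactive shell that inherits the new namespace.
	cmd := exec.Command("bash")
	cmd.Stdin = os.Stdin
	cmd.Stdout = os.Stdout
	cmd.Stderr = os.Stderr
	cmd.Env = os.Environ()

	// Run blocks until the shell exits; its exit status is ignored here.
	cmd.Run()
}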
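
Propagation settings are recorded per mount in the optional fields of /proc/self/mountinfo (tags such as shared:N and master:N; a mount with no tag is private). The following is a minimal Go sketch, not part of the original experiment, that prints the propagation type of every mount visible to the current process. The function name mountPropagation is hypothetical, and the tag-to-name mapping is my reading of the proc man pages:

```go
package main

import (
	"bufio"
	"fmt"
	"os"
	"strings"
)

// mountPropagation returns the mount point and the propagation type
// encoded in the optional fields of one /proc/<pid>/mountinfo line.
func mountPropagation(line string) (target, prop string, err error) {
	fields := strings.Fields(line)
	if len(fields) < 7 {
		return "", "", fmt.Errorf("malformed mountinfo line: %q", line)
	}
	var tags []string
	foundSep := false
	// Optional fields start at index 6 and end at a lone "-" separator.
	for _, f := range fields[6:] {
		if f == "-" {
			foundSep = true
			break
		}
		switch {
		case strings.HasPrefix(f, "shared:"):
			tags = append(tags, "shared")
		case strings.HasPrefix(f, "master:"):
			tags = append(tags, "slave")
		case f == "unbindable":
			tags = append(tags, "unbindable")
		}
	}
	if !foundSep {
		return "", "", fmt.Errorf("no separator in mountinfo line: %q", line)
	}
	if len(tags) == 0 {
		return fields[4], "private", nil
	}
	return fields[4], strings.Join(tags, ","), nil
}

func main() {
	f, err := os.Open("/proc/self/mountinfo")
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	defer f.Close()

	scanner := bufio.NewScanner(f)
	for scanner.Scan() {
		target, prop, err := mountPropagation(scanner.Text())
		if err != nil {
			fmt.Fprintln(os.Stderr, err)
			continue
		}
		fmt.Printf("%-30s %s\n", target, prop)
	}
	if err := scanner.Err(); err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
}
```

Running it from a shell started with the unshare CLI and from a shell started by the Go program above lets you compare the two listings side by side. The same information should also be available via findmnt -o TARGET,PROPAGATION.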
