Coding Agent Base Playground

Get a disposable agentic coding environment in seconds. Claude Code, Gemini CLI, OpenAI Codex, Opencode, Plandex, and other agents come preinstalled and ready to use.

What is This and Why?

This playground gives you a quick way to clone any GitHub repository into a preconfigured coding environment and unleash the full power of coding agents on it without being afraid of damaging your host OS or compromising your sensitive local data.

⚠️ Warning: This playground is meant to be used only with public repositories and non-critical API keys. This restriction is not specific to the playground: it applies whenever you entrust your code or access keys to a potentially vulnerable coding agent.

Quick Start

- Enter a repository name (optional)

- Click Start Playground (or use labctl playground start --ssh coding-agent-base)

- The login shell will end up in $HOME/workspace

- Fire up your favorite agent in YOLO mode 🙈

Example:

# Authenticate to Claude API

claude setup-token

# Try out an ad hoc prompt

claude -p "what does this project do?"

# Add any number of MCP servers

claude mcp add postgres-server

# Run a coding session with the YOLO mode on

claude --dangerously-skip-permissions

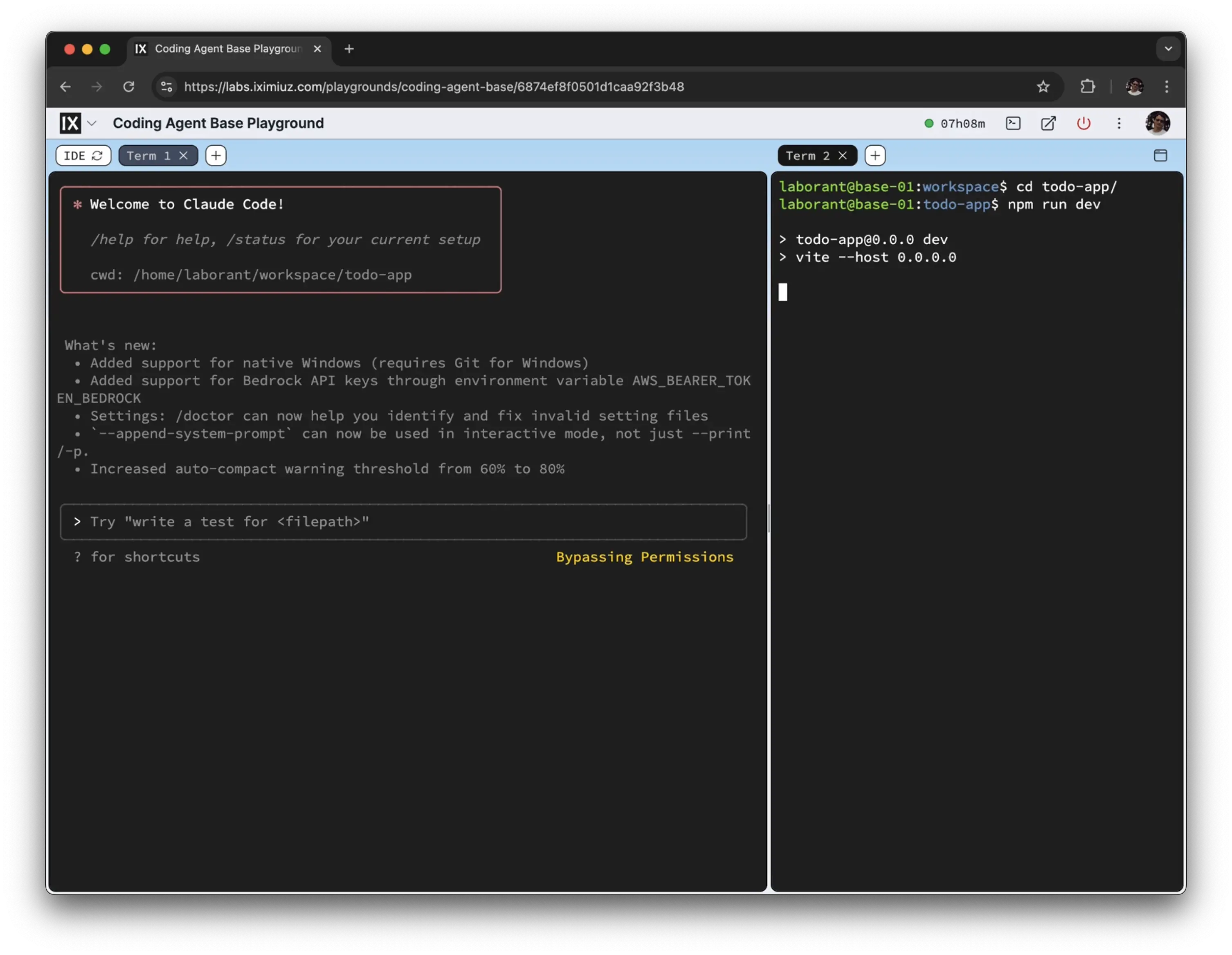

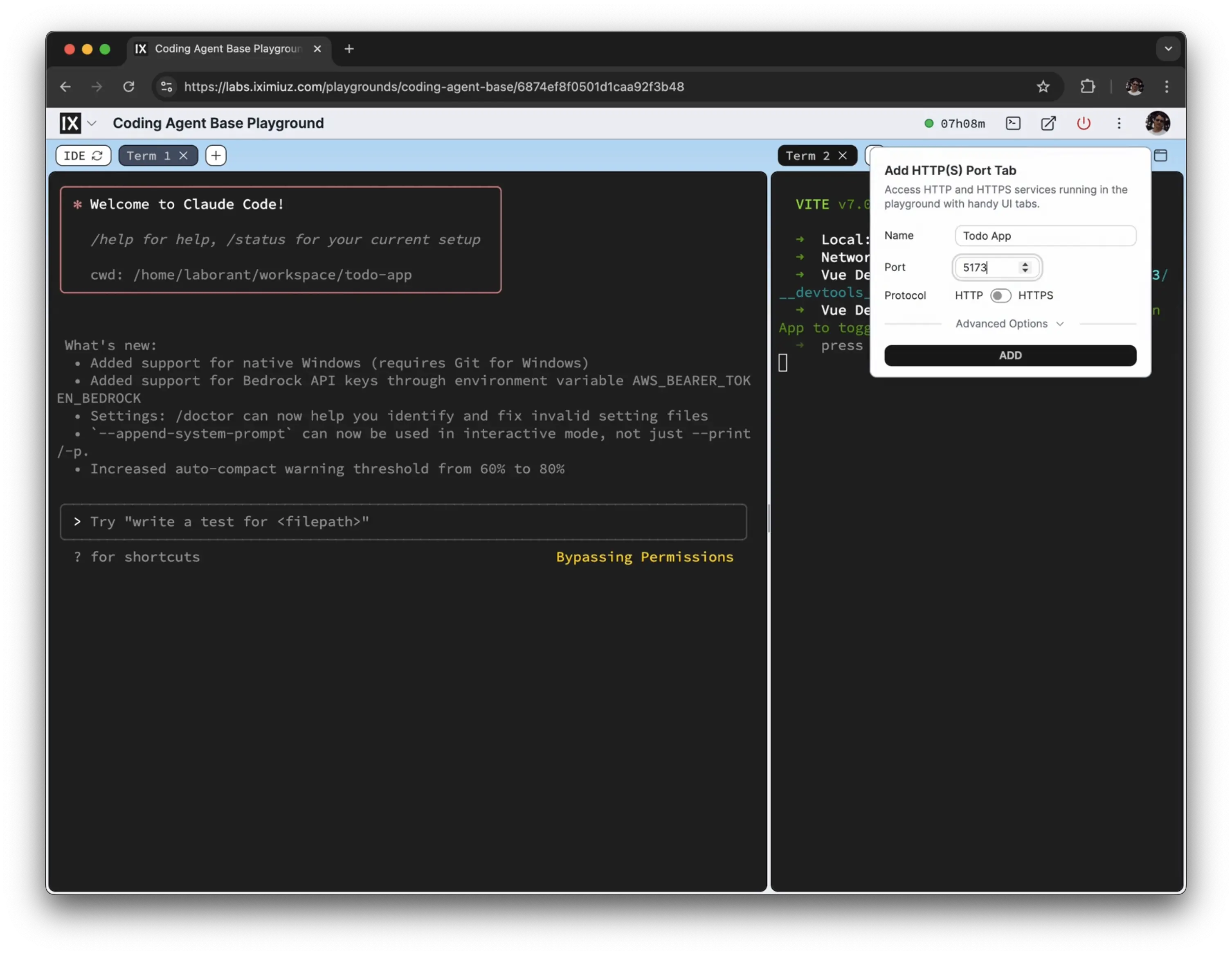

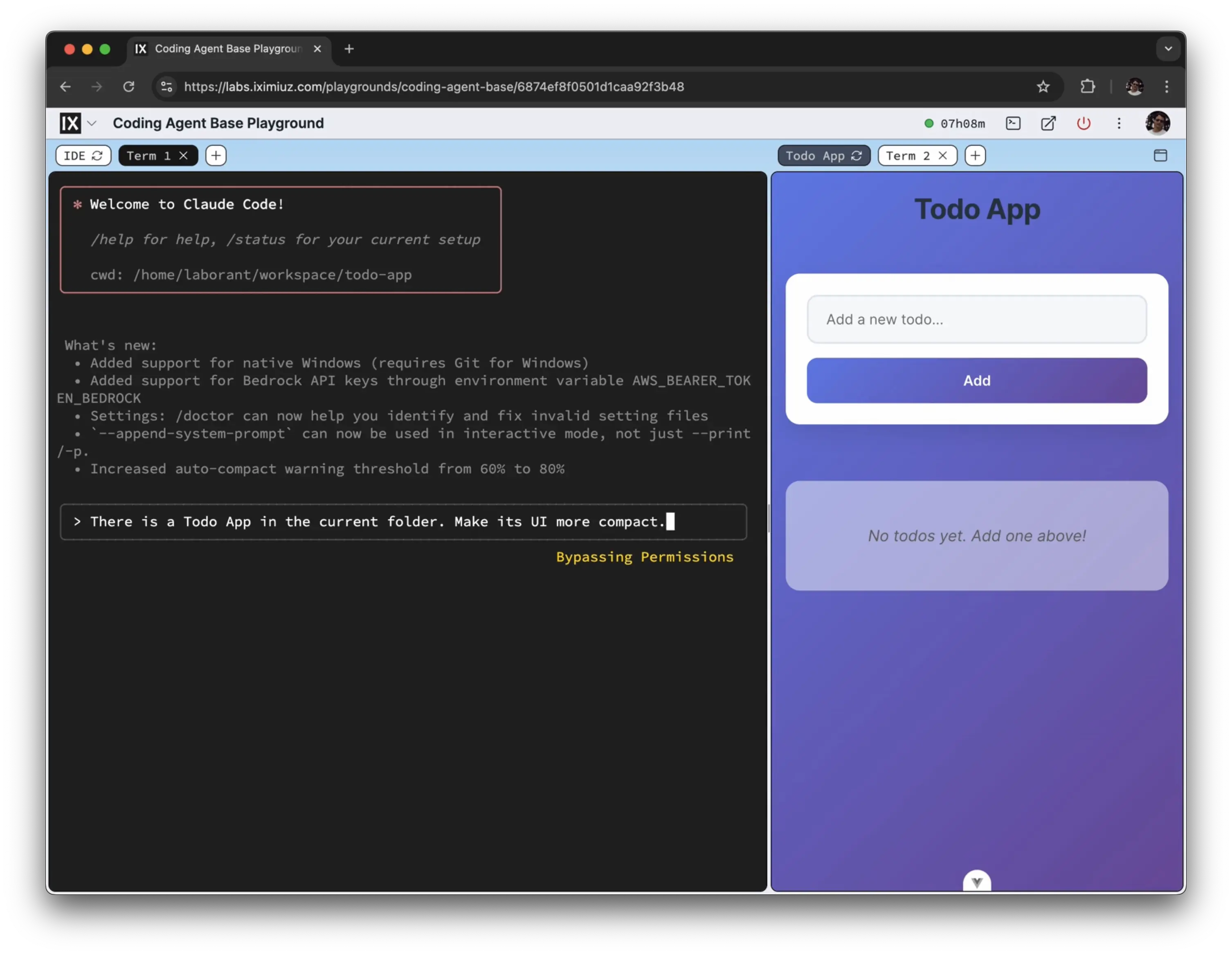

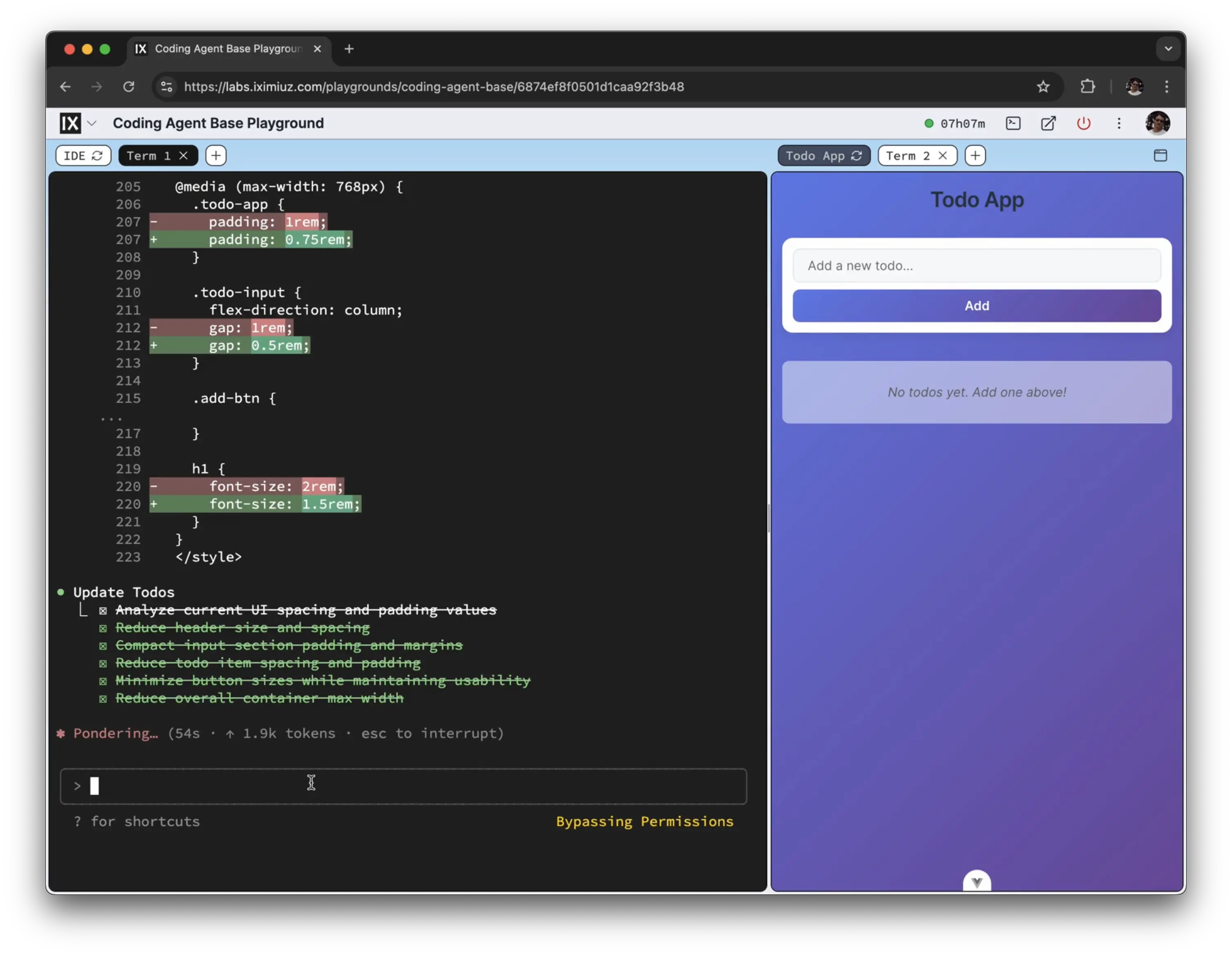

If you're developing a web application, ask the agent to start a dev server on 0.0.0.0:3000, and use a split view (cmd + \) with a web tab on the right.
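If the repository has no dev server of its own yet, a stand-in can confirm that the web tab reaches port 3000. The following is a minimal sketch, assuming python3 is on the PATH (Python is among the preinstalled runtimes); the serve_preview helper name is hypothetical and not part of the playground.

```shell
# Hypothetical helper: serve a directory on all interfaces so the web tab can reach it.
# Usage: serve_preview [directory] [port]
serve_preview() {
  dir="${1:-.}"
  port="${2:-3000}"
  # http.server ships with the Python standard library;
  # --bind and --directory require Python 3.7 or newer.
  python3 -m http.server "$port" --bind 0.0.0.0 --directory "$dir"
}
```

Running serve_preview with no arguments serves the current directory on 0.0.0.0:3000 until interrupted with Ctrl+C. Binding to 0.0.0.0 rather than 127.0.0.1 matters here because the web tab connects from outside the VM.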

Familiar Developer Experience

The playground provides the tools most developers already know:

- A full-fledged Linux VM with Docker preinstalled

- Terminal access with tabs and split view support

- A dynamically reloaded "web page" sidebar to review changes

- A built-in web IDE (VS Code) for fine-tuning generated code

- Access from a local terminal via labctl ssh

- Exposing the work with a public (or authentication-protected) URL

- Sharing playground terminal sessions with colleagues

- Coming soon: snapshotting and recovering the results

The above capabilities should make development in the playground as convenient as running agents locally. At the same time, the remote nature of the playground allows for publishing results and sharing access, while the fully isolated VM sandbox should provide additional peace of mind.

Supported Application Stacks

At the moment, the following language runtimes and the corresponding development tools are preinstalled (but we're happy to add more upon request):

- Python

- Node.js

- Go

Agents

Enjoy all SOTA coding agents and a couple of contenders. Mix, match, and combine them at your own risk 🧪

| Agent | Command | Repository | Getting Started |
|---|---|---|---|
| Claude Code | claude | - | Quickstart |
| OpenAI Codex CLI | codex | openai/codex | OpenAI Codex Documentation |
| Gemini CLI | gemini | google-gemini/gemini-cli | Gemini CLI Documentation |
| GitHub Copilot CLI | copilot | github/copilot-cli | About GitHub Copilot CLI |
| OpenHands | openhands | All-Hands-AI/OpenHands | Running OpenHands locally |
| Opencode | opencode | sst/opencode | Opencode Documentation |
| Aider | aider | Aider-AI/aider | Usage Guide |
| Plandex | plandex | plandex-ai/plandex | Quick Start Guide |
| Qwen Code | qwen-code | QwenLM/qwen-code | Quick Start |
| Crush | crush | charmbracelet/crush | Getting Started |
| Cline | (via web IDE plugin) | cline/cline | Getting Started |
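To see which of these commands are actually available in a given session, a quick PATH check can help. This is a minimal sketch: the command names are copied from the table above, Cline is left out because it runs as a web IDE plugin, and the check_agents function name is hypothetical.

```shell
# Print one line per agent command: "<command>: found" or "<command>: missing".
# Command names are taken from the Agents table; adjust if your session differs.
check_agents() {
  for cmd in claude codex gemini copilot openhands opencode aider plandex qwen-code crush; do
    if command -v "$cmd" >/dev/null 2>&1; then
      echo "$cmd: found"
    else
      echo "$cmd: missing"
    fi
  done
}

check_agents
```

A "missing" line only means the name is not on the PATH of the current shell; the tool may be installed under a different command name.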

More LLM Tools

The playground also ships with a few general-purpose LLM tools.

Ollama

Ollama allows you to run large language models locally. It's great for experimenting with different models without sending your code to external services.

To get started with Ollama:

# Start the Ollama server

ollama serve

# Pull a model and start using it

ollama pull llama3.2:latest

ollama run llama3.2:latest
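Note that ollama serve stays in the foreground, so the pull and run commands need a second terminal tab unless the server is sent to the background. The sketch below shows one way to wait for a backgrounded server before using it. It assumes curl is available and that Ollama listens on its default address, 127.0.0.1:11434; the wait_for_url helper is hypothetical.

```shell
# Hypothetical helper: poll a URL until it answers or the attempts run out.
# Usage: wait_for_url <url> [attempts]
wait_for_url() {
  url="$1"
  attempts="${2:-30}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # -s: silent, -f: treat HTTP errors as failures, --max-time: do not hang
    if curl -sf --max-time 2 "$url" >/dev/null 2>&1; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}

# Intended use (commented out so that sourcing this snippet starts nothing):
#   ollama serve >/tmp/ollama.log 2>&1 &
#   wait_for_url http://127.0.0.1:11434/api/tags && ollama pull llama3.2:latest
```

Polling the API instead of sleeping for a fixed time keeps the sequence reliable when the server takes longer than expected to start.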

LLM (simonw/llm)

llm is a command-line tool and Python library for interacting with Large Language Models, both via remote APIs and models that can be installed and run on your own machine.

To use it with local or remote models:

# Use it with a local model (assumes llama3.2:latest was fetched with ollama pull and the llm-ollama plugin is installed)

llm -m llama3.2:latest 'What is the capital of France?'

# Or with remote APIs (requires API key configuration)

llm keys set openai

llm -m gpt-4 'Explain how async/await works in JavaScript'

You can also use llm to manage different model configurations and maintain conversation history across multiple interactions.

MCP Tools (f/mcptools)

mcptools is a command-line tool for interacting with MCP (Model Context Protocol) servers using both stdio and HTTP transport.

Example:

# List all available tools from a filesystem server

mcptools tools \
  npx -y @modelcontextprotocol/server-filesystem ~

# Call a specific tool

mcptools call read_file \
  --params '{"path":"README.md"}' \
  npx -y @modelcontextprotocol/server-filesystem ~

# Open an interactive shell

mcptools shell \
  npx -y @modelcontextprotocol/server-filesystem ~
